
How to Locate Dependable Proxies for Online Data Extraction

April 6, 2025, 11:13

In the world of web scraping, reliable proxies are a vital component of successful data extraction. Proxies can help safeguard your identity, avoid throttling by servers, and let you reach restricted data. However, finding the right proxy solution can feel overwhelming, as there are countless options available online. This guide is designed to help you navigate the complex landscape of proxies and find the most effective ones for your web scraping needs.

As you search for proxies, you'll come across terms like proxy scrapers, proxy checkers, and proxy lists. These tools are vital for sourcing high-quality proxies and ensuring they perform well for your specific tasks. Whether you are looking for free options or considering paid services, understanding how to assess proxy speed, anonymity, and reliability will significantly improve your web scraping projects. This guide covers best practices for finding and using proxies effectively, so that your data scraping is both efficient and successful.

Understanding Proxies for Data Extraction

Proxies play a crucial role in web scraping by serving as intermediaries between the scraper and the websites being scraped. When you use a proxy, your requests to a website come from the proxy's IP address instead of your own. This helps hide your true IP and reduces the chance that the website blocks you because of abnormal traffic patterns. By using proxies, data collectors can retrieve data without revealing their real IPs, allowing for more extensive and effective data extraction.
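As a minimal sketch of routing requests through a proxy, the snippet below uses Python's standard `urllib.request.ProxyHandler`. The proxy address is a hypothetical placeholder from a documentation IP range, not a working proxy, and the helper name `build_proxy_opener` is introduced here purely for illustration.

```python
import urllib.request


def build_proxy_opener(proxy_url: str) -> urllib.request.OpenerDirector:
    """Return an opener that sends HTTP and HTTPS requests through proxy_url."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    return urllib.request.build_opener(handler)


# Hypothetical proxy address (203.0.113.0/24 is reserved for documentation).
opener = build_proxy_opener("http://203.0.113.10:8080")

# With a real proxy, a call such as the following would reach the target
# site from the proxy's IP address rather than your own:
# opener.open("https://example.com", timeout=10)
```

Building the opener does not touch the network; only the commented-out `open` call would.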

Different types of proxies serve different roles in web scraping. HTTP proxies are suitable for standard web traffic, while SOCKS proxies can relay a wider variety of traffic: any TCP connection and, with SOCKS5, UDP as well. The choice between HTTP and SOCKS proxies depends on the specific needs of your data extraction project, such as the protocols involved and the level of anonymity required. Additionally, proxies can be grouped as either public or private, with private proxies generally offering better speed and reliability for scraping jobs.

To ensure successful data extraction, it is essential to find high-quality proxies. Reliable proxies not only maintain fast response times but also provide a level of anonymity that protects your data collection tasks. A proxy verification tool can help you measure the performance and anonymity of the proxies you intend to use. Ultimately, understanding how to use proxies properly can greatly improve both the efficiency and the success rate of your web scraping.

Types of Proxies: HTTP, SOCKS4, and SOCKS5

When it comes to web scraping, understanding the different types of proxies is essential for effective data collection. HTTP proxies are designed to handle web traffic and are often used for accessing websites or gathering web content. They perform well for standard HTTP requests, which makes them a natural fit for scraping ordinary web pages. However, they may struggle with non-HTTP traffic.

SOCKS4 proxies provide a more versatile option for web scraping, as they can relay any TCP-based traffic rather than only HTTP. This flexibility allows users to circumvent restrictions and access a wider range of online resources. However, SOCKS4 does not support UDP, authentication, or IPv6 traffic, which may limit its applicability in certain situations.

SOCKS5 is the most advanced of the three, offering more features than SOCKS4. It supports both TCP and UDP, allows for authentication, and can handle IPv6 addresses. This makes SOCKS5 proxies an excellent choice for web scraping, particularly when anonymity is a concern. Their ability to tunnel different types of traffic adds to their effectiveness in fetching data from multiple sources. Note that SOCKS5 does not encrypt traffic by itself, so sensitive requests should still go over HTTPS.
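The differences between the three protocols can be captured in a small lookup, sketched below. The feature labels (`"web"`, `"tcp"`, `"udp"`, `"auth"`, `"ipv6"`) and the `supports` helper are illustrative names, and the table is deliberately limited to the capabilities discussed in this section.

```python
from urllib.parse import urlsplit

# Capabilities per proxy protocol, restricted to the features named above.
# The labels are illustrative, not part of any standard.
FEATURES = {
    "http": {"web"},
    "socks4": {"web", "tcp"},
    "socks5": {"web", "tcp", "udp", "auth", "ipv6"},
}


def supports(proxy_url: str, needed: set) -> bool:
    """Return True if the proxy's protocol covers every feature in `needed`."""
    scheme = urlsplit(proxy_url).scheme.lower()
    return set(needed) <= FEATURES.get(scheme, set())


# Hypothetical proxy addresses from documentation IP ranges.
print(supports("socks5://203.0.113.10:1080", {"udp", "auth"}))
print(supports("socks4://203.0.113.11:1080", {"udp"}))
```

A check like this could be run before a job starts, so that a project needing UDP or authentication does not get assigned a SOCKS4 proxy.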

Identifying Trustworthy Proxy Providers

When looking for trustworthy proxy sources for web scraping, it's essential to start with well-known suppliers that have an established reputation. Many users opt for paid services that offer private proxies, which tend to be more stable and faster than free alternatives. Such providers often have a good track record for server uptime and support, so users get consistent results. Reading user reviews and community recommendations can help you locate trustworthy sources.

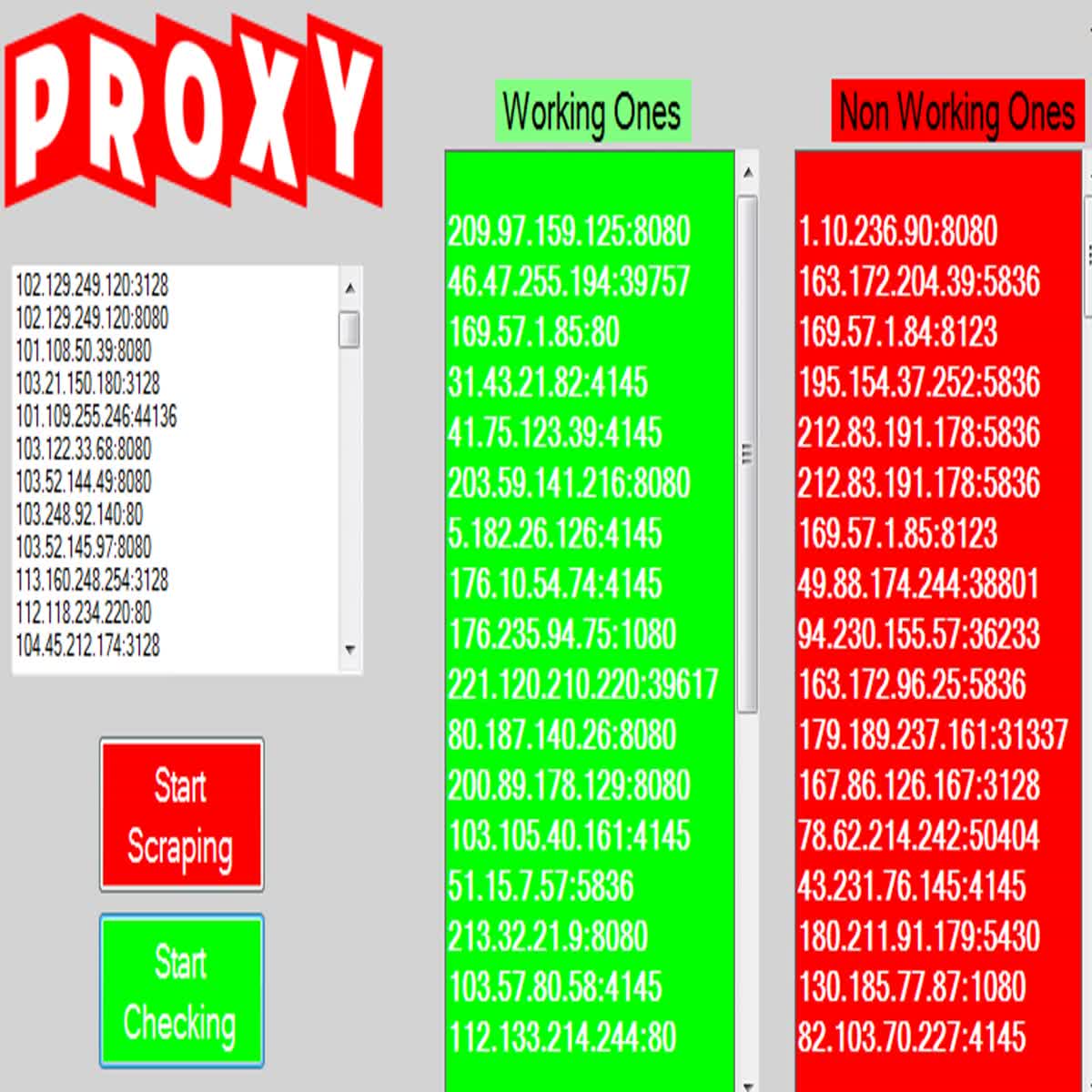

Another approach is to use proxy lists and tools that gather proxies from various websites. Although many such lists are available, their quality can vary significantly. To make sure the proxies are dependable, use a proxy checker to test their speed and anonymity. Focus on sources that refresh their lists frequently, as this helps you obtain up-to-date, working proxies, which is crucial for keeping web scraping effective.

Finally, it's important to distinguish between HTTP and SOCKS proxies. HTTP proxies are usually used for web browsing, whereas SOCKS proxies are more flexible and can handle multiple types of connections. If your data scraping projects require that extra flexibility, investing in a SOCKS proxy may be worthwhile. Understanding the distinction between public and private proxies can also inform your decision. Public proxies are generally free but tend to be slower and less secure, whereas private proxies cost money but offer better performance and security for data scraping.

Proxy Scrapers: Tools and Strategies

In web scraping, having the right tools is important for acquiring data quickly. Proxy scrapers are designed specifically to simplify the task of gathering proxy lists from diverse sources. These tools save time and ensure you have a variety of proxies available for your scraping tasks. A free proxy scraper can be a good way to begin, but it's important to assess the quality and reliability of the proxies it collects.
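At its core, a proxy scraper pulls `ip:port` pairs out of raw text and discards duplicates and malformed entries. The sketch below shows one way to do that with the standard library; it assumes IPv4 proxies in plain `ip:port` form, and the function name `extract_proxies` and the sample text are hypothetical.

```python
import ipaddress
import re

# Matches candidates such as 203.0.113.10:8080 (IPv4 only, by assumption).
PROXY_RE = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})\b")


def extract_proxies(text: str) -> list:
    """Return unique, syntactically valid ip:port entries in order of appearance."""
    seen = set()
    result = []
    for host, port in PROXY_RE.findall(text):
        try:
            ipaddress.IPv4Address(host)  # rejects octets above 255
        except ValueError:
            continue
        if not 1 <= int(port) <= 65535:
            continue
        entry = f"{host}:{port}"
        if entry not in seen:
            seen.add(entry)
            result.append(entry)
    return result


# Hypothetical page content; addresses come from documentation IP ranges.
sample = """
203.0.113.10:8080
fast proxy: 198.51.100.7:3128 (updated today)
203.0.113.10:8080
999.1.1.1:80
192.0.2.1:70000
"""
print(extract_proxies(sample))
```

Extraction only proves that an entry is well formed. Whether the proxy actually works still has to be established by a checker.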

To keep a scraping project effective, it helps to use a fast proxy scraper that can also verify proxy performance quickly. Tools like ProxyStorm offer advanced features that help users filter proxies by criteria such as speed, level of anonymity, and type (HTTP or SOCKS). With this kind of proxy checker, scrapers can automate much of the verification work, which makes data extraction more streamlined.
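Filtering by speed, anonymity, and type can be sketched as follows. This is not how any particular tool works; the `ProxyInfo` record, the anonymity tier names, and the default thresholds are all assumptions made for illustration.

```python
from dataclasses import dataclass

# Assumed ordering of anonymity tiers, weakest to strongest.
ANONYMITY_RANK = {"transparent": 0, "anonymous": 1, "elite": 2}


@dataclass(frozen=True)
class ProxyInfo:
    address: str
    protocol: str      # "http", "socks4", or "socks5"
    anonymity: str     # a key of ANONYMITY_RANK
    latency_ms: float  # measured beforehand by a checker


def filter_proxies(proxies, protocols=None, min_anonymity="anonymous",
                   max_latency_ms=1000.0):
    """Keep proxies that meet every criterion, fastest first."""
    threshold = ANONYMITY_RANK[min_anonymity]
    selected = [
        p for p in proxies
        if (protocols is None or p.protocol in protocols)
        and ANONYMITY_RANK[p.anonymity] >= threshold
        and p.latency_ms <= max_latency_ms
    ]
    return sorted(selected, key=lambda p: p.latency_ms)


# Hypothetical measurements for illustration only.
candidates = [
    ProxyInfo("203.0.113.10:8080", "http", "transparent", 120.0),
    ProxyInfo("203.0.113.11:1080", "socks5", "elite", 340.0),
    ProxyInfo("203.0.113.12:1080", "socks5", "anonymous", 90.0),
    ProxyInfo("203.0.113.13:1080", "socks4", "elite", 2500.0),
]
for proxy in filter_proxies(candidates, protocols={"socks4", "socks5"}):
    print(proxy.address, proxy.latency_ms)
```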

Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies is also important. Each type serves different purposes, with SOCKS5 offering the most versatility and supporting the broadest range of protocols. When picking tools for proxy scraping, consider a proxy verification tool that measures proxy speed and anonymity. This ensures that the proxies you use are not only functional but also appropriate for your specific web scraping needs, whether you are working on SEO tasks or automated data extraction projects.

Testing and Verifying Proxy Anonymity

When using proxies for web scraping, it is crucial to verify that they are genuinely anonymous. Testing proxy anonymity means checking whether your real IP address is revealed while you are connected through the proxy. This can be done with online services that report your current IP address, checked both before and after connecting through the proxy. A dependable anonymous proxy should hide your actual IP, revealing only the proxy's IP address to any external sites you visit.

To check the level of anonymity, you can sort proxies into three types: transparent, anonymous, and elite (high anonymity). Transparent proxies do not hide your IP and are easily detected. Anonymous proxies conceal your IP but may still reveal that they are proxies. Elite proxies provide the most complete masking, so that requests look as if they come from regular users. A proxy checker tool lets you determine which type of proxy you are dealing with and confirm that you are using the best option for protected scraping.
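One common heuristic for telling the three levels apart is to look at what the target server actually receives. The sketch below assumes you already have the IP and headers the server saw (for example from an echo endpoint you control). The list of proxy-revealing headers is an assumption, not an exhaustive standard, and the IP matching only covers IPv4.

```python
import re

# Headers that commonly reveal a proxy is in use (assumed, not exhaustive).
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}
IPV4_RE = re.compile(r"\d{1,3}(?:\.\d{1,3}){3}")


def classify_anonymity(real_ip: str, seen_ip: str, seen_headers: dict) -> str:
    """Classify a proxy from the IP and headers observed by the target server."""
    leaked_ips = set(IPV4_RE.findall(" ".join(seen_headers.values())))
    if seen_ip == real_ip or real_ip in leaked_ips:
        return "transparent"   # the real IP is exposed
    if any(name.lower() in PROXY_HEADERS for name in seen_headers):
        return "anonymous"     # IP hidden, but proxy use is visible
    return "elite"             # no sign of the real IP or of a proxy


# Hypothetical observations; all addresses are documentation IPs.
real = "198.51.100.1"
print(classify_anonymity(real, "203.0.113.10",
                         {"X-Forwarded-For": "198.51.100.1", "Via": "1.1 proxy"}))
print(classify_anonymity(real, "203.0.113.10", {"Via": "1.1 proxy"}))
print(classify_anonymity(real, "203.0.113.10", {"User-Agent": "scraper/1.0"}))
```

Header inspection is only a heuristic. A site can also detect proxies by other means, such as IP reputation lists, so an "elite" result here is not a guarantee.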

Regularly testing the anonymity of your proxies not only safeguards your identity but also improves the efficiency of your web scraping. Proxy checker services or specialized proxy testing tools can handle this process for you, saving time and confirming that you are consistently using high-quality proxies. Use them to maintain a robust and anonymous web scraping operation.

Free vs. Paid Proxies: Which to Select?

When choosing proxies for web scraping, one of the key decisions you'll face is whether to use free or paid proxies. Free proxies are easy to find and cost nothing, making them an appealing option for casual scrapers or anyone on a tight budget. However, their reliability and speed can be inconsistent. They often come with limitations such as slow connections, limited anonymity, and a higher likelihood of being blocked by target websites, all of which can undermine your extraction efforts.

On the other hand, paid proxies generally provide a more reliable and secure solution. They often come with features like dedicated IP addresses, higher speed, and better anonymity. Paid proxy services frequently offer strong customer support, helping you troubleshoot any problems that arise during your scraping tasks. Moreover, these proxies are less likely to be blacklisted, which makes for a smoother and more effective scraping process, especially on larger and more complex projects.

Ultimately, the decision between free and paid proxies depends on your specific needs. If you are doing small-scale scraping and can tolerate some interruptions or reduced speeds, free proxies may be sufficient. However, if your scraping operations require speed, reliability, and anonymity, a paid proxy service is likely the better option.

Best Practices for Using Proxies in Automated Tasks

When using proxies for automation, it's crucial to rotate them regularly to minimize detection and bans by target websites. A proxy rotation strategy helps distribute requests across multiple proxies, reducing the likelihood of hitting rate limits or triggering protection measures. You can achieve this with an online proxy list generator that provides a diverse set of proxies, or with a fast proxy scraper that retrieves fresh proxies frequently.
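A simple round-robin rotation can be sketched as below. The `ProxyRotator` class is a hypothetical helper, and the proxy addresses are placeholders. Real rotation schemes often add per-proxy cooldowns or weighting, which this sketch leaves out.

```python
from collections import deque


class ProxyRotator:
    """Hand out proxies in round-robin order and drop ones that get banned."""

    def __init__(self, proxies):
        self._pool = deque(proxies)

    def next(self) -> str:
        if not self._pool:
            raise RuntimeError("no proxies left in the pool")
        proxy = self._pool[0]
        self._pool.rotate(-1)  # move the proxy just used to the back
        return proxy

    def remove(self, proxy: str) -> None:
        """Stop using a proxy, for example after a ban or repeated timeouts."""
        if proxy in self._pool:
            self._pool.remove(proxy)


# Hypothetical pool; each request would use rotator.next() as its proxy.
rotator = ProxyRotator(["203.0.113.10:8080", "203.0.113.11:8080", "203.0.113.12:8080"])
for _ in range(4):
    print(rotator.next())
```

Spreading requests this way means no single IP carries the whole request volume, which is the point of rotation.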

Evaluating the reliability and speed of your proxies is just as important. Use a proxy checker to confirm that they work before you start data extraction. Tools that report detailed data on latency and response times will help you find the most efficient proxies for your automated tasks. It's also essential to monitor your proxies continuously. A proxy validation tool should be part of your routine, so that you are always using high-quality proxies that are still active.
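A basic speed and liveness check can be sketched with the standard library, as below. The test URL, the timeout, and the assumption that every proxy is an HTTP proxy are illustrative choices; the `fetch` parameter exists so the timing logic can be exercised without real network access.

```python
import http.client
import time
import urllib.request

TEST_URL = "https://example.com"  # assumed test target; use a page you may fetch


def fetch_via_proxy(proxy: str, timeout: float = 5.0) -> None:
    """Fetch TEST_URL through an HTTP proxy given as 'ip:port'."""
    proxy_url = f"http://{proxy}"
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    with opener.open(TEST_URL, timeout=timeout) as response:
        response.read(1)


def check_proxy(proxy: str, fetch=fetch_via_proxy):
    """Return the response time in seconds, or None if the proxy failed."""
    start = time.perf_counter()
    try:
        fetch(proxy)
    except (OSError, http.client.HTTPException):
        return None
    return time.perf_counter() - start


def rank_proxies(proxies, fetch=fetch_via_proxy):
    """Return (proxy, seconds) pairs for working proxies, fastest first."""
    timed = [(proxy, check_proxy(proxy, fetch)) for proxy in proxies]
    working = [(proxy, secs) for proxy, secs in timed if secs is not None]
    return sorted(working, key=lambda item: item[1])
```

Running `rank_proxies` on a schedule, and removing proxies that drop out of the results, is one way to make validation part of the routine.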

Finally, understand the distinction between private and public proxies when setting up your automation. Although free public proxies are cost-effective, they often lack the reliability and security of private ones. If your automated projects require reliable and secure connections, investing in private proxies may be worthwhile. Weigh your own requirements for anonymity and speed to pick the best proxies for data extraction and keep your operations running smoothly.
