
Steps: How to Test Whether a Proxy Works Correctly

April 6, 2025, 9:00

In today's digital world, the need for privacy and data protection has led many people to explore proxy servers. Whether you are web scraping, managing SEO tasks, or simply protecting your online activity, knowing how to verify that a proxy server actually works is important. Proxies act as intermediaries between your computer and the internet, letting you hide your IP address and reach content that may be restricted in your region. However, not all proxies are alike: HTTP, SOCKS4, and SOCKS5 proxies differ in what they support, and a faulty proxy can derail your work and cause frustration.

This article walks you through a step-by-step process for confirming that your proxies work. We will cover tools and methods, including proxy scrapers and proxy checkers, that help you find, verify, and evaluate your proxies. We will also look at key concepts such as the differences between HTTP, SOCKS4, and SOCKS5 proxies, and how to measure the performance and anonymity of your proxies. By the end, you will be equipped to manage your proxies efficiently for web scraping, automation, and similar tasks.

Understanding Proxy Servers

Proxy servers act as intermediaries between users and the web, offering additional privacy. When you connect to the internet through a proxy, your requests are routed through it, so the sites you visit see the proxy's IP address instead of yours. This makes it harder for websites and online services to track your browsing activity, adding a layer of anonymity that many online activities depend on.

There are several types of proxy servers, including HTTP, SOCKS4, and SOCKS5, each suited to different purposes. HTTP proxies understand web traffic and are best for general browsing, while SOCKS proxies relay traffic at a lower level and therefore support a wider range of protocols, which makes them suitable for applications such as file sharing and online gaming. Plain SOCKS4 carries only TCP connections to IPv4 addresses, whereas SOCKS5 adds authentication, UDP relaying, IPv6, and hostname resolution by the proxy. Knowing these differences helps you choose the right proxy for a given task.
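The difference between the three types shows up in the very first bytes a client sends when it asks a proxy to open a connection to a target. The minimal Python sketch below builds those opening messages, following the HTTP `CONNECT` method, the SOCKS4 request layout, and the SOCKS5 layout from RFC 1928. It does not open sockets or parse replies, and the function names are illustrative rather than taken from any library.

```python
import ipaddress
import struct


def http_connect_request(host: str, port: int) -> bytes:
    """HTTP proxies tunnel arbitrary TCP (e.g. HTTPS) via the CONNECT method."""
    target = f"{host}:{port}"
    return f"CONNECT {target} HTTP/1.1\r\nHost: {target}\r\n\r\n".encode("ascii")


def socks4_connect_request(ipv4: str, port: int, user_id: bytes = b"") -> bytes:
    """SOCKS4: version 4, command 1 (CONNECT), port, IPv4 address, user ID, NUL.

    Plain SOCKS4 needs a numeric IPv4 address; it cannot carry a hostname.
    """
    packed_ip = ipaddress.IPv4Address(ipv4).packed
    return struct.pack("!BBH", 4, 1, port) + packed_ip + user_id + b"\x00"


def socks5_greeting() -> bytes:
    """SOCKS5 greeting: version 5, one auth method offered, 0x00 = no authentication."""
    return bytes([5, 1, 0])


def socks5_connect_request(host: str, port: int) -> bytes:
    """SOCKS5 CONNECT with address type 3 (domain name), so the proxy resolves DNS."""
    name = host.encode("idna")
    if len(name) > 255:
        raise ValueError("hostname too long for SOCKS5")
    # version 5, command 1 (CONNECT), reserved 0, address type 3, name length
    return bytes([5, 1, 0, 3, len(name)]) + name + struct.pack("!H", port)
```

For example, `socks5_connect_request("example.com", 443).hex()` shows the hostname travelling inside the request, while `socks4_connect_request` only accepts a numeric address such as `203.0.113.5`.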

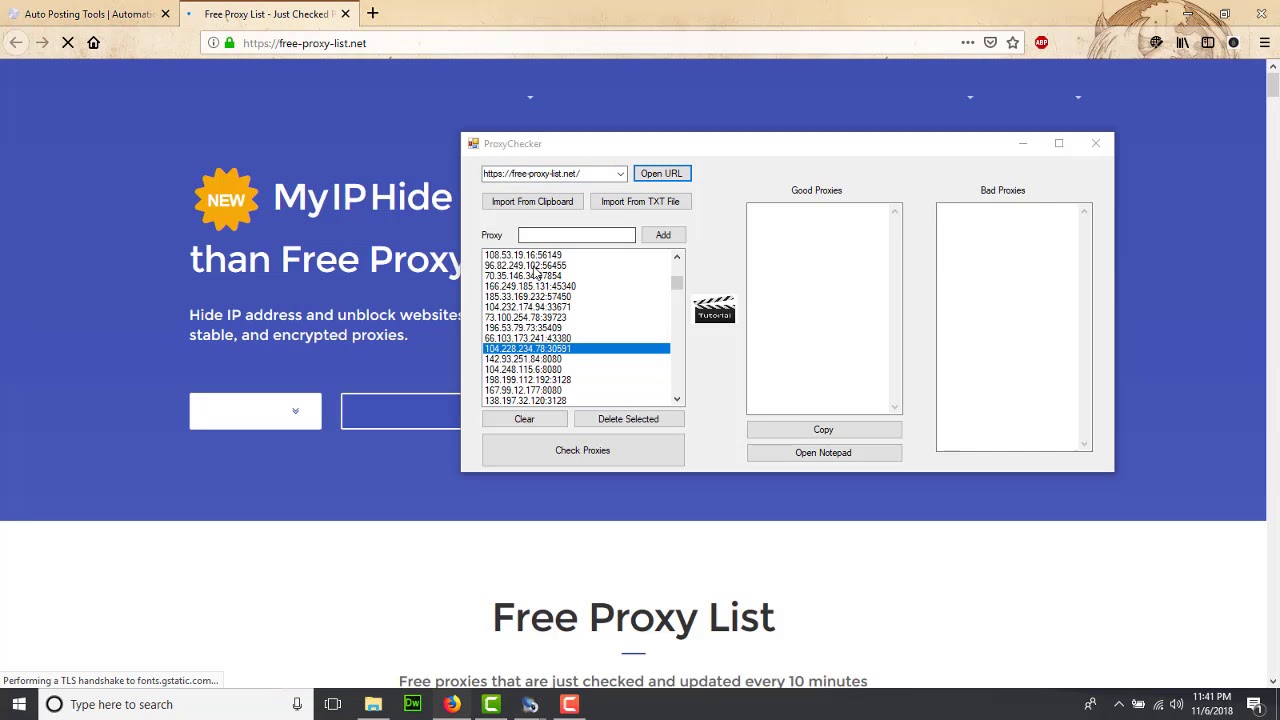

Using proxies effectively requires knowing how to check that they work correctly. This means using a proxy checker to evaluate their speed, anonymity, and reliability. With many options available, including fast proxy scrapers and dedicated proxy checkers, you can make sure you are using high-quality proxies for tasks such as web scraping, automation, and data extraction.
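The most basic functional check sends one request through the proxy and sees whether a successful response comes back. The sketch below uses only Python's standard library (`urllib.request.ProxyHandler`), which handles HTTP proxies but not SOCKS. The test URL `http://example.com/` and the `check_proxy` helper are illustrative assumptions, not part of any particular tool.

```python
import http.client
import time
import urllib.error
import urllib.request
from typing import Optional


def check_proxy(proxy_url: str, test_url: str = "http://example.com/",
                timeout: float = 10.0) -> tuple[bool, Optional[float]]:
    """Return (works, seconds) for an HTTP proxy such as "http://203.0.113.5:8080".

    `timeout` bounds each socket operation, not the whole request.
    """
    # An explicit ProxyHandler routes both http:// and https:// URLs via the proxy.
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    start = time.perf_counter()
    try:
        with opener.open(test_url, timeout=timeout) as response:
            response.read(1024)  # read part of the body to confirm data flows
            status = response.status
    except (urllib.error.URLError, http.client.HTTPException, OSError, ValueError):
        return False, None  # refused, timed out, bad reply, or malformed proxy URL
    if not 200 <= status < 300:
        return False, None
    return True, time.perf_counter() - start
```

A dead proxy yields `(False, None)`; a working one yields `(True, elapsed_seconds)`, which already gives you a rough speed figure.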

Overview of Proxy Scraping Tools

Proxy scraping tools are essential for anyone who needs to gather and validate proxies for online tasks such as web scraping and automation. These tools let you compile a list of available proxies from many sources, ensuring a steady supply of proxy IPs for your work. As the need for online privacy grows, a reliable proxy scraper can greatly simplify the job of finding working proxies.

One of the biggest advantages of proxy scraping tools is their ability to filter and sort proxies by criteria such as speed, anonymity level, and type (HTTP, SOCKS4, SOCKS5). For example, a fast proxy scraper can find quick proxies for time-sensitive work, while a proxy checker can verify that each collected proxy works and is reliable. This matters for anyone who relies on proxies for web scraping or data extraction, because proxy quality directly affects the results.
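Filtering and sorting by these criteria is straightforward once each proxy is stored as a record. The sketch below assumes a hypothetical `ProxyRecord` type with fields for type, anonymity level, and measured latency; the field names, anonymity labels, and default thresholds are illustrative, and real tools usually store richer data.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

# Anonymity levels from weakest to strongest (labels are illustrative).
ANONYMITY_RANK = {"transparent": 0, "anonymous": 1, "elite": 2}


@dataclass(frozen=True)
class ProxyRecord:
    host: str
    port: int
    kind: str                         # "http", "socks4" or "socks5"
    anonymity: str                    # a key of ANONYMITY_RANK
    latency: Optional[float] = None   # seconds; None = not measured or failed


def select_proxies(records: Iterable[ProxyRecord],
                   kinds: Optional[set[str]] = None,
                   min_anonymity: str = "anonymous",
                   max_latency: float = 2.0) -> list[ProxyRecord]:
    """Keep proxies that pass every filter, sorted fastest first."""
    floor = ANONYMITY_RANK[min_anonymity]
    chosen = [
        r for r in records
        if r.latency is not None
        and r.latency <= max_latency
        and ANONYMITY_RANK.get(r.anonymity, -1) >= floor   # unknown labels fail
        and (kinds is None or r.kind in kinds)
    ]
    return sorted(chosen, key=lambda r: r.latency)
```

For instance, `select_proxies(records, kinds={"socks5"}, min_anonymity="elite", max_latency=1.0)` keeps only fast, high-anonymity SOCKS5 proxies.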

In recent years, the range of proxy scraping tools has grown, offering options for both beginners and advanced users. Free proxy scrapers are available for those on tight budgets, while paid tools offer more features and support for users willing to invest. As demand for proxies keeps rising, staying informed about the best proxy sources and tools is essential for efficient online work.

How to Collect Proxies for Free

Gathering proxies for free is a practical way to build a proxy list without spending money. One of the simplest methods is to use popular websites that publish free proxy lists. Many of these sites update regularly and list each proxy's type, speed, and anonymity level. By browsing these sites, you can assemble a set of proxies to test for functionality later.

Another approach is to use scraping tools or libraries that automate collection. Python, for example, offers libraries such as BeautifulSoup and the Scrapy framework, which can be configured to extract proxy details from specific websites. With a simple script that fetches pages from proxy-listing websites, you can collect and organize a list of candidate proxies in minutes, a scalable way to gather proxies efficiently.
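As a dependency-free illustration of such a script, the sketch below swaps BeautifulSoup for a regular expression: it strips the HTML tags from a page and pulls out anything shaped like `IP:port`, or an IP address followed by a port in the next table cell. Fetching the page is left out, and the pattern is an assumption about typical list layouts; real proxy-list sites vary, so an HTML parser such as BeautifulSoup is usually more robust.

```python
import ipaddress
import re

TAG_RE = re.compile(r"<[^>]+>")
# IPv4 address, then ":" or whitespace (e.g. the gap between two table cells),
# then a port; the lookarounds stop matches inside longer dotted number runs.
PROXY_RE = re.compile(
    r"(?<![\d.])(\d{1,3}(?:\.\d{1,3}){3})(?:\s*:\s*|\s+)(\d{1,5})(?!\d|\.\d)"
)


def extract_proxies(html: str) -> list[str]:
    """Return unique "ip:port" strings found in an HTML page, in first-seen order."""
    text = TAG_RE.sub(" ", html)  # replace tags with spaces so cells stay separated
    found = {}  # dict keys keep insertion order, giving ordered de-duplication
    for ip, port in PROXY_RE.findall(text):
        try:
            ipaddress.IPv4Address(ip)  # rejects octets above 255
        except ValueError:
            continue
        if 1 <= int(port) <= 65535:
            found[f"{ip}:{port}"] = None
    return list(found)
```

In a real script you would first fetch each list page (for example with `urllib.request.urlopen`, within the site's terms) and pass the decoded HTML to `extract_proxies`. The results are only candidates until they have been tested.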

It is crucial to validate the proxies you gather to make sure they work. After scraping, use a proxy checker to test each proxy's availability, speed, and anonymity. This step filters out dead proxies and lets you focus on those that perform best for your needs. By collecting and validating proxies regularly, you can maintain a strong, reliable proxy list for web scraping and automation.
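Checking hundreds of scraped proxies one at a time is slow, so checkers usually test them concurrently. The sketch below, assuming plain `host:port` strings, uses a thread pool with a simple TCP connect as the availability test. A successful connect only proves the port is open, so in practice you would follow it with a real request through the proxy. The `tcp_latency` and `validate_proxies` names and the worker count are hypothetical choices.

```python
import socket
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Optional


def tcp_latency(address: str, timeout: float = 3.0) -> Optional[float]:
    """Seconds needed to open a TCP connection to "host:port", or None on failure."""
    host, _, port = address.rpartition(":")
    start = time.perf_counter()
    try:
        with socket.create_connection((host, int(port)), timeout=timeout):
            return time.perf_counter() - start
    except (OSError, ValueError, OverflowError):
        return None  # unreachable, refused, timed out, or malformed address


def validate_proxies(addresses: list[str],
                     check: Callable[[str], Optional[float]] = tcp_latency,
                     workers: int = 50) -> list[tuple[str, float]]:
    """Run `check` on every address concurrently; return live ones, fastest first."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(check, addresses))  # map keeps input order
    live = [(addr, t) for addr, t in zip(addresses, results) if t is not None]
    return sorted(live, key=lambda pair: pair[1])
```

Because `check` is a parameter, the same loop can run a fuller test (such as a real HTTP request through each proxy) without changes.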

Assessing Proxy Anonymity and Performance

When using proxies, evaluating their anonymity and performance is crucial for successful web scraping and automation. Anonymity levels can vary significantly from one proxy to another, whether it is an HTTP, SOCKS4, or SOCKS5 proxy. To find out how anonymous a proxy is, you can use online tools that display the IP address they see. If the tool shows your real IP, the proxy is most likely transparent. If it shows a different IP, the proxy offers some anonymity, but you need to test further to classify it as merely anonymous or as high-anonymity (elite).
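This classification can be automated by requesting an echo page (often called a proxy judge) through the proxy and inspecting what the server saw. The sketch below applies a common heuristic: transparent if your real IP reaches the server, anonymous if proxy-revealing headers such as `Via` or `X-Forwarded-For` appear without your IP, and elite if neither does. The header list and the three labels follow a widespread convention among proxy checkers rather than a formal standard, and fetching the judge page is assumed to happen elsewhere.

```python
import re
from typing import Mapping

# Headers that commonly reveal a proxy or the client's original address.
REVEALING_HEADERS = (
    "via", "x-forwarded-for", "forwarded", "x-real-ip",
    "client-ip", "proxy-connection",
)


def _tokens(value: str) -> set[str]:
    """Split a header value such as "for=192.0.2.60;proto=http" into bare tokens."""
    return set(re.split(r'[\s,;="\[\]]+', value))


def classify_anonymity(seen_headers: Mapping[str, str], seen_ip: str,
                       real_ip: str) -> str:
    """Return "transparent", "anonymous" or "elite" for one proxied request.

    seen_headers, seen_ip: what the echo server observed for the proxied request.
    real_ip: your own public IP, measured once without the proxy.
    """
    headers = {name.lower(): value for name, value in seen_headers.items()}
    if seen_ip == real_ip or any(real_ip in _tokens(v) for v in headers.values()):
        return "transparent"  # your address reaches the server
    if any(name in headers for name in REVEALING_HEADERS):
        return "anonymous"    # address hidden, but the proxy announces itself
    return "elite"            # no sign of the client or of a proxy
```

Comparing whole tokens rather than substrings avoids false matches such as `198.51.100.7` inside `198.51.100.77`.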

Assessing proxy speed involves measuring response time and latency. High-quality proxies have low latency and fast response times, which makes them suitable for speed-sensitive work such as automated scraping. One way to evaluate performance is with a proxy checker that tests each proxy and reports on its speed. You can also send simple HTTP requests through the proxy and time how long each response takes. This lets you compare proxies and identify the fastest ones.
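Timing a few requests per proxy and taking the median gives a steadier figure than a single measurement, because one slow response can skew the result. The sketch below accepts any zero-argument `fetch` callable (for instance, a function that sends one request through a given proxy), so the timing logic stays independent of the HTTP library. The helper names and the default of three samples are illustrative assumptions.

```python
import statistics
import time
from typing import Callable, Mapping, Optional


def median_latency(fetch: Callable[[], object], samples: int = 3) -> Optional[float]:
    """Median seconds over `samples` calls to `fetch`, or None if any call fails."""
    timings = []
    for _ in range(samples):
        start = time.perf_counter()
        try:
            fetch()
        except Exception:
            return None  # one failed request disqualifies the proxy
        timings.append(time.perf_counter() - start)
    return statistics.median(timings)


def rank_by_speed(fetchers: Mapping[str, Callable[[], object]],
                  samples: int = 3) -> list[tuple[str, float]]:
    """Return (proxy, median seconds) for proxies that never failed, fastest first."""
    measured = {name: median_latency(fetch, samples) for name, fetch in fetchers.items()}
    live = [(name, t) for name, t in measured.items() if t is not None]
    return sorted(live, key=lambda pair: pair[1])
```

A `fetch` could be `lambda: opener.open("http://example.com/", timeout=10).read()`, where `opener` was built with `urllib.request.build_opener(urllib.request.ProxyHandler({...}))` for the proxy under test.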

Testing anonymity and performance should be an ongoing process, especially when you scrape over long periods. Proxy quality can change over time due to factors such as server load and network changes. Re-checking your proxies regularly keeps your list suited to your needs. By combining both tests, you can weed out poor-quality proxies and get the best performance from your web scraping and automation work.
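One simple way to keep re-testing without discarding a proxy over a single hiccup is to count consecutive failures and retire a proxy only after several in a row. The `ProxyPool` class below is a hypothetical sketch of that bookkeeping; the default threshold of three failures is an arbitrary assumption, and scheduling the periodic rounds is left to your own loop or job scheduler.

```python
from collections import defaultdict
from typing import Callable


class ProxyPool:
    """Tracks proxy health across repeated rounds of checks."""

    def __init__(self, proxies: list[str], max_failures: int = 3) -> None:
        self.max_failures = max_failures
        self.active = list(proxies)        # proxies still in use
        self.retired = []                  # proxies dropped after repeated failures
        self._failures = defaultdict(int)  # consecutive failures per proxy

    def record(self, proxy: str, ok: bool) -> None:
        """Store one check result; retire the proxy after too many failures in a row."""
        if proxy not in self.active:
            return
        if ok:
            self._failures[proxy] = 0  # a success resets the streak
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures:
            self.active.remove(proxy)
            self.retired.append(proxy)

    def check_all(self, check: Callable[[str], bool]) -> None:
        """Run one round of checks, iterating over a copy since retiring mutates the list."""
        for proxy in list(self.active):
            self.record(proxy, check(proxy))
```

Requiring a streak of failures trades a little responsiveness for stability: a proxy that times out once under load stays in the pool, while one that is truly gone is removed after a few rounds.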

Selecting the Top Proxy Sources

When looking for the best proxy providers, consider your specific needs and use cases. Some proxies are better suited to web scraping, while others work well for tasks such as automation and browsing. Look for providers that offer a dependable mix of HTTP and SOCKS proxies, since this lets you handle a wider range of tasks. Make sure the provider you choose has a reputation for reliable service and satisfied users.

Another important factor is the geographic diversity of the proxies on offer. If your scraping or automation tasks need access to region-specific content, favor providers with proxies in many countries and regions. This helps you get past geo-restrictions and ensures your data collection returns the intended results without being blocked. Always check the provider's legitimacy to avoid problems such as IP bans or slow connections.

Finally, consider the providers' pricing. Some offer free proxies, while others use subscription plans. Free proxies can be tempting, but they often come with limitations in speed and reliability. Paid proxies typically offer better performance, privacy, and customer support. Review your budget and weigh free against paid options before deciding, as investing in better proxies can significantly improve your chances of success in web scraping and automation.

Using Proxies for Web Scraping

Web scraping is a powerful technique for collecting data from websites, but it often comes with challenges, especially when it comes to accessing data without being blocked. This is where proxies come in. A proxy acts as an intermediary between your scraper and the target site, letting you send requests without revealing your real IP address. This helps prevent IP bans and keeps your scraping running smoothly.
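Spreading requests across several proxies is often done with simple round-robin rotation, so consecutive requests leave from different IP addresses. The sketch below builds one `urllib.request` opener per HTTP proxy and cycles through them. The `RotatingOpener` class is a hypothetical helper, the addresses in the usage line are placeholders from the documentation IP ranges, and real scrapers typically add retries and per-proxy delays on top.

```python
import itertools
import urllib.request


class RotatingOpener:
    """Round-robin rotation over HTTP proxies, one urllib opener per proxy."""

    def __init__(self, proxy_urls: list[str]) -> None:
        if not proxy_urls:
            raise ValueError("need at least one proxy")
        openers = [
            (url, urllib.request.build_opener(
                urllib.request.ProxyHandler({"http": url, "https": url})))
            for url in proxy_urls
        ]
        self._cycle = itertools.cycle(openers)

    def next_opener(self) -> tuple[str, urllib.request.OpenerDirector]:
        """Return the next (proxy_url, opener) pair in the rotation."""
        return next(self._cycle)

    def fetch(self, url: str, timeout: float = 10.0) -> bytes:
        """Fetch `url` through the next proxy; successive calls cycle through them."""
        _, opener = self.next_opener()
        with opener.open(url, timeout=timeout) as response:
            return response.read()
```

Usage: `RotatingOpener(["http://203.0.113.5:8080", "http://198.51.100.7:3128"]).fetch("http://example.com/")`.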

When choosing proxies for web scraping, consider which type best fits your goals. HTTP proxies are commonly used for scraping web pages, while SOCKS proxies are more versatile and can handle other kinds of traffic. The speed and reliability of the proxies also matter, since unstable connections hurt scraping performance. A proxy checker can confirm the performance and speed of your proxy list before you start large scraping jobs.
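If your scraper uses the third-party `requests` library, the proxy type is expressed in the URL scheme of its `proxies` mapping. SOCKS support needs the optional extra (`pip install requests[socks]`), and the `socks5h` and `socks4a` schemes make the proxy resolve hostnames instead of your machine, which avoids leaking DNS lookups. The helper below is a hypothetical sketch that only builds the mapping, so it runs without `requests` installed.

```python
# (proxy type, resolve DNS on the proxy?) -> URL scheme understood by requests/urllib3
SCHEMES = {
    ("http", False): "http",
    ("http", True): "http",       # HTTP proxies receive the hostname anyway
    ("socks4", False): "socks4",
    ("socks4", True): "socks4a",
    ("socks5", False): "socks5",
    ("socks5", True): "socks5h",
}


def requests_proxies(kind: str, host: str, port: int,
                     remote_dns: bool = True) -> dict[str, str]:
    """Build the `proxies` argument for requests.get(..., proxies=...)."""
    try:
        scheme = SCHEMES[(kind.lower(), remote_dns)]
    except KeyError:
        raise ValueError(f"unsupported proxy type: {kind!r}") from None
    proxy_url = f"{scheme}://{host}:{port}"
    # The same proxy handles both plain-HTTP and HTTPS target URLs.
    return {"http": proxy_url, "https": proxy_url}
```

Usage: `requests.get("https://example.com/", proxies=requests_proxies("socks5", "203.0.113.5", 1080), timeout=10)`.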

The ethical implications of web scraping should not be ignored either. It is important to follow the terms of use of the sites you scrape. High-quality proxies can help here by spreading your requests across different IP addresses, reducing the chance of being flagged as suspicious. By using proxies strategically, you can extend your web scraping capabilities while following best practices.
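A concrete, machine-checkable courtesy is honoring a site's `robots.txt` before scraping it. It is not the same as the site's terms of use, which you still need to read, but Python's standard `urllib.robotparser` makes the check easy. In this sketch the rules are passed in as text so it runs offline; against a live site you would call `set_url(...)` and `read()` on the parser instead. The helper names and the `MyScraper` user-agent string are placeholders.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser


def allowed(robots_txt: str, url: str, user_agent: str = "MyScraper") -> bool:
    """Return True if the given robots.txt rules permit `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


def crawl_delay(robots_txt: str, user_agent: str = "MyScraper") -> Optional[float]:
    """Crawl-delay in seconds requested for `user_agent`, or None if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else None
```

Respecting a stated crawl delay per site, not per proxy, keeps the total load on the site the same no matter how many IP addresses your requests are spread across.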

Common Problems and Troubleshooting

When using a proxy, a common problem is a failure to connect. This can happen for several reasons, such as the proxy being offline, mistyped proxy details, or network restrictions. To fix it, first make sure the proxy address and port number are correctly configured in your software. If the settings are correct, check whether the proxy server itself is up. A trusted proxy checker can confirm the proxy's status.
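When a proxy will not connect, it helps to tell a typo from a dead server. The sketch below first validates the `host:port` string, then attempts a plain TCP connection and maps the outcome to a coarse diagnosis: a DNS failure usually points to a mistyped hostname, "refused" to a wrong port or a stopped proxy, and a timeout to a firewall or other network restriction. The function names and labels are hypothetical, and a successful connect still does not prove the proxy relays traffic correctly.

```python
import socket
from typing import Optional


def parse_proxy_address(text: str) -> Optional[tuple[str, int]]:
    """Split "host:port" (an optional "scheme://" prefix is ignored); None if malformed."""
    text = text.strip()
    if "://" in text:
        text = text.split("://", 1)[1]
    text = text.rstrip("/")
    host, sep, port = text.rpartition(":")
    if not sep or not host or not port.isdecimal() or not 1 <= int(port) <= 65535:
        return None
    return host, int(port)


def diagnose(host: str, port: int, timeout: float = 5.0) -> str:
    """Return "ok", "dns_error", "refused", "timeout" or "error" for a TCP connect."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return "ok"
    except socket.gaierror:
        return "dns_error"   # hostname could not be resolved
    except ConnectionRefusedError:
        return "refused"     # nothing listening on that port
    except socket.timeout:
        return "timeout"     # packets silently dropped, e.g. by a firewall
    except OSError:
        return "error"       # other failures, such as an unreachable network
```

Usage: `addr = parse_proxy_address("http://203.0.113.5:8080/")` followed by `diagnose(*addr)` if `addr` is not `None`.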

Another common issue is slow performance. If your proxy responds slowly, the cause may be an overloaded proxy server or one located far from you or from the site you are accessing. To improve speed, try different proxies and use a fast proxy checker to find quicker ones. Also, if you use a free proxy, expect slower speeds than with paid proxies.
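Rather than sticking with one slow proxy, a scraper can try several in turn under a per-request timeout and keep the first that answers. A minimal sketch, assuming a caller-supplied `attempt(proxy, timeout)` function that performs one request and raises on failure or timeout; ordering the candidates by previously measured speed makes the first success likely to be a fast one. The `first_working` name is hypothetical.

```python
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def first_working(proxies: Sequence[str],
                  attempt: Callable[[str, float], T],
                  timeout: float = 5.0) -> tuple[str, T]:
    """Try each proxy in order and return (proxy, result) for the first success."""
    errors = []
    for proxy in proxies:
        try:
            return proxy, attempt(proxy, timeout)
        except Exception as exc:  # timed out or broken: record it and move on
            errors.append(f"{proxy}: {exc!r}")
    raise RuntimeError("all proxies failed:\n" + "\n".join(errors))
```

Keeping the per-attempt timeout short bounds how long a slow proxy can stall the scraper before the next candidate is tried.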

Anonymity problems can also occur when the proxy does not adequately hide your IP address. To check, use a reliable anonymity checker that tests whether your real IP address is exposed. If the proxy turns out to be transparent or offers weak anonymity, switch to a more reliable, secure option. For scraping and automation, a high-quality proxy is essential for both performance and security.
