Discovering the Best Tools for Collecting Free Proxy Servers

April 6, 2025, 10:31

In the current digital landscape, data harvesting has become an integral practice for companies, researchers, and developers alike. One significant challenge in the scraping process, however, is the need for dependable proxies. Whether you are gathering data from sites, automating tasks, or performing market research, free proxies can offer a cost-effective solution. The right tools simplify the process of finding and verifying these proxies, so that you can access the information you need without restrictions.

This article presents the best tools for scraping free proxies, examining options such as proxy harvesters and checkers, and detailing how to gather and verify proxy lists. From the differences between HTTP and SOCKS proxies to identifying high-quality sources, it provides a guide to improving your scraping capabilities. Join us as we navigate the world of free proxies and look at the best strategies for making the most of them in your projects.

Grasping Proxy Server Categories

Proxies are vital tools for many online operations, notably data extraction and automation. They act as intermediaries between a user's computer and the internet, letting users send requests without exposing their true IP addresses. There are several categories of proxies, each serving a specific role. The most common are HTTP, HTTPS, and SOCKS proxies, each with its own capabilities and use cases.

HTTP proxies are built mainly for web traffic and handle regular web requests well. They are often used for routine web browsing and for scraping pages that do not demand secure transmission. HTTPS proxies, on the other hand, add a layer of security by encrypting the information sent between the user and the target website. This type is particularly important when handling sensitive information or when anonymity is a priority.

SOCKS proxies are more flexible than HTTP and HTTPS proxies. They work at a lower network level and handle many types of traffic, including HTTP, FTP, and even torrent traffic. SOCKS4 and SOCKS5 are the two popular versions in this class, with SOCKS5 adding capabilities such as authentication and UDP support. Selecting the right kind of proxy depends on the exact demands of the task at hand, such as speed, anonymity, and compatibility with the target application.
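In code, the proxy type usually shows up as the scheme of a proxy URL. The sketch below is a minimal illustration, not a definitive implementation: the helper name `proxy_mapping` and the example address are assumptions. It validates a proxy URL and returns the scheme-to-proxy dictionary shape that HTTP clients such as `requests` or `urllib.request.ProxyHandler` accept. Note that SOCKS schemes typically need extra client support (for `requests`, the optional SOCKS extra).

```python
from urllib.parse import urlparse

# Proxy types discussed in the text; the names follow common URL schemes.
SUPPORTED_SCHEMES = {"http", "https", "socks4", "socks5"}


def proxy_mapping(proxy_url: str) -> dict[str, str]:
    """Validate a proxy URL and map both http and https traffic to it.

    Hypothetical helper: raises ValueError for unknown schemes or
    URLs without an explicit host and port.
    """
    parsed = urlparse(proxy_url)
    if parsed.scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {parsed.scheme!r}")
    if not parsed.hostname or parsed.port is None:
        raise ValueError(f"proxy URL needs a host and port: {proxy_url!r}")
    # Route both plain and TLS requests through the same proxy.
    return {"http": proxy_url, "https": proxy_url}


if __name__ == "__main__":
    # 203.0.113.0/24 is a documentation-only address range.
    print(proxy_mapping("socks5://203.0.113.10:1080"))
```

Keeping validation separate from the request itself means malformed list entries are rejected before any network time is spent on them.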

Leading Proxy Collection Solutions

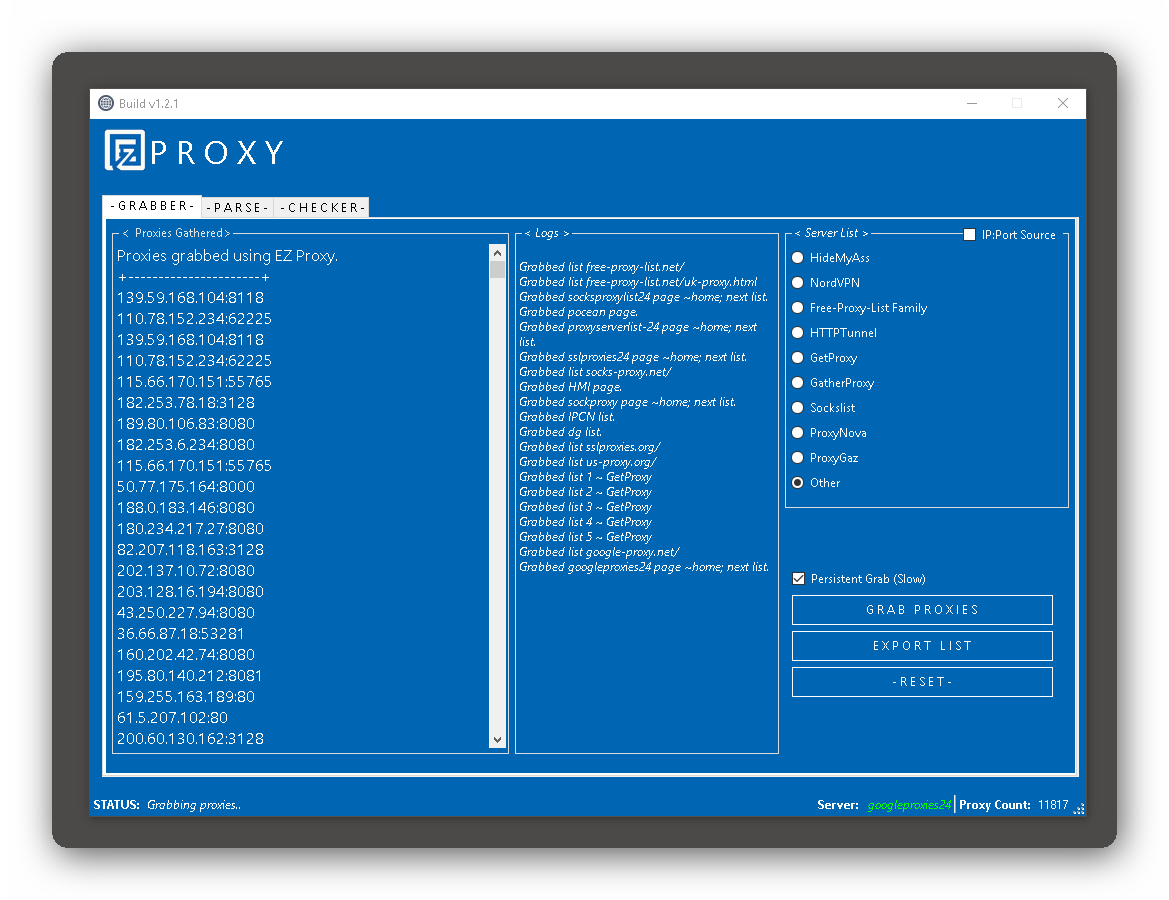

When collecting free proxies, using the right tools is vital for efficient scraping. One option is ProxyStorm, a straightforward application that lets you extract a wide range of proxies quickly. Because it can locate servers across multiple websites, ProxyStorm streamlines the task of gathering proxies for a variety of purposes, from web browsing to web scraping. Its user-friendly interface makes it accessible even to those who aren't tech-savvy.

Another option is a fast proxy scraper that not only collects proxies but also filters them by speed and anonymity level. These scrapers give users higher-quality proxies that improve the web scraping process. The best free proxy checkers of 2025 are likely to be bundled with such tools, helping users quickly verify the status and performance of the proxies they've gathered. This capability is crucial to making sure that scraping projects are both secure and successful.

If you're using Python, proxy scraping libraries can significantly simplify the process. These libraries let users retrieve and check proxies programmatically, and provide methods to assess proxy speed and reliability. Pairing a proxy scraper with a robust proxy verification tool offers an effective way to automate data extraction tasks. By combining these tools, users can improve their scraping operations with little hassle.

How to Scrape Free Proxies

To gather public proxies successfully, you need to locate trustworthy sources that regularly publish current proxy lists. Sites and forums focused on proxy sharing are valuable resources for finding new proxies. Well-known sources include specialized proxy-sharing forums and GitHub projects where developers upload the proxies they find. Keep in mind that the quality of the proxies can vary, so it's important to assess a source's trustworthiness before continuing.

Once you've gathered potential proxy sources, the next step is to use a proxy scraper tool. A good proxy scraper should be able to crawl web pages and retrieve the proxy information automatically. Some scrapers include features that let you filter proxies by type, such as HTTPS or SOCKS5, which makes it easier to compile a customized list that meets your requirements. Fast proxy scrapers that can quickly parse several sources will save you effort and help you keep your list current.
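The extraction step of a scraper can be sketched in a few lines. The example below is a minimal sketch that assumes the source page has already been downloaded and that proxies appear as plain `IP:port` text; the function name `extract_proxies` and the sample text are hypothetical. It pulls out well-formed IPv4 entries and removes duplicates while preserving order.

```python
import re

# Matches IPv4:port candidates; range checks happen afterwards.
PROXY_RE = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{2,5})\b")


def extract_proxies(text: str) -> list[str]:
    """Return unique, well-formed 'ip:port' strings found in text."""
    seen: set[str] = set()
    result: list[str] = []
    for host, port in PROXY_RE.findall(text):
        octets_ok = all(0 <= int(part) <= 255 for part in host.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{host}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            result.append(candidate)
    return result


if __name__ == "__main__":
    sample = "203.0.113.5:8080 and 198.51.100.7:3128, bad: 999.1.1.1:80"
    print(extract_proxies(sample))
```

A regular expression is enough for plain-text lists; sources that render proxies in HTML tables or obfuscate ports would need an HTML parser instead.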

After gathering proxies, it's crucial to test them for functionality and anonymity. A robust proxy checker will help you establish whether the proxies work and evaluate their performance. Checking for anonymity is also essential, particularly if you're using these proxies for web scraping. By making sure that your proxies are reliable and fast, you can avoid slowdowns and blocks caused by problematic proxies.

Proxy Verification Methods

When working with proxy lists, it's crucial to verify that the proxies work before including them in your processes. Several methods can be used to check whether a proxy server is functional. One common approach is to route a request through the proxy to a reliable URL and check the result. A valid response shows that the proxy is operational, while errors or long delays point to problems that need attention. This approach is straightforward and can be automated in a script or with a specialized verification tool.
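A basic liveness check of this kind can be written with the standard library alone. This is a sketch under stated assumptions: the function name `check_proxy`, the default test URL (`https://httpbin.org/ip`, a public echo service), and the `opener_factory` parameter (added so the check can be exercised without a network) are illustrative choices. `urllib` handles HTTP and HTTPS proxies here; SOCKS proxies would need a third-party library.

```python
import http.client
import urllib.request


def check_proxy(
    proxy_url: str,
    test_url: str = "https://httpbin.org/ip",  # assumed test endpoint
    timeout: float = 5.0,
    opener_factory=urllib.request.build_opener,
) -> bool:
    """Return True if a request through the proxy gets a 2xx response."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = opener_factory(handler)
    try:
        with opener.open(test_url, timeout=timeout) as response:
            return 200 <= response.status < 300
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, HTTP errors, bad replies.
        return False


if __name__ == "__main__":
    # Placeholder address from a documentation range; expect False.
    print(check_proxy("http://203.0.113.10:8080", timeout=3.0))
```

A short timeout matters as much as the status check: a proxy that answers after thirty seconds is of little use in a scraping loop.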

Another useful technique is to assess the anonymity level of the proxy. This is especially important for data scraping, because some proxies do not hide your IP address properly. With software designed to test proxy anonymity, you can find out whether a proxy is transparent, anonymous, or highly anonymous (elite). This helps you select the right type of proxy for your scraping needs, so that your tasks remain discreet and do not draw unwanted attention.

Speed is another vital consideration, notably for tasks that need fast data retrieval. Speed tests measure how long requests take through the proxy compared with a direct connection, which lets you identify the fastest proxies in your list. A trustworthy testing tool can streamline this procedure, reporting not just speed but also other performance statistics for each proxy in real time, so that you can tune your scraping tasks efficiently.
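One way to compare proxies by speed is to time a fixed request several times and rank by the median. The sketch below assumes a caller-supplied `probe(proxy)` callable that performs one request through the proxy and raises on failure; `median_latency`, `rank_by_speed`, and the injectable `clock` parameter are hypothetical names chosen for illustration.

```python
import statistics
import time


def median_latency(probe, proxy, attempts=3, clock=time.perf_counter):
    """Median seconds per probe call, or None if any attempt fails."""
    samples = []
    for _ in range(attempts):
        start = clock()
        try:
            probe(proxy)
        except Exception:
            return None  # Treat any failure as "not usable".
        samples.append(clock() - start)
    return statistics.median(samples)


def rank_by_speed(probe, proxies, attempts=3, clock=time.perf_counter):
    """Return working proxies sorted from fastest to slowest."""
    timed = []
    for proxy in proxies:
        latency = median_latency(probe, proxy, attempts, clock)
        if latency is not None:
            timed.append((latency, proxy))
    return [proxy for _, proxy in sorted(timed)]
```

The median is used rather than the mean so that a single slow outlier does not distort a proxy's ranking; timing a direct request with the same `probe` gives the baseline the proxies are compared against.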

Testing Proxy Anonymity Levels

When using proxy servers for online activities, understanding their degree of anonymity is important. Proxies fall into three main categories: transparent, anonymous, and elite. Transparent proxies pass your IP address along with each request, which makes them unsuitable for anonymity. Anonymous proxies hide your IP address but may reveal that they are proxies. Elite proxies provide the highest level of anonymity, hiding your IP address without disclosing that a proxy is in use.

To test the anonymity of a proxy server, you can use IP-check utilities and sites that display the IP address they see. By connecting through a proxy and visiting such a site, you can observe whether your real IP is visible or whether the visible IP belongs to the proxy. This allows a straightforward assessment: if only the proxy's IP is visible, the proxy is hiding your address; if your real IP is visible, the proxy is transparent.
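The comparison above can be expressed as a small classification function. This is a simplified sketch: it assumes you already know your real IP and have the IP and request headers that a test endpoint observed when you connected through the proxy. The function name, the header list, and the labels (the three levels are often called transparent, anonymous, and elite) are illustrative, and real checkers inspect more signals.

```python
# Request headers that commonly reveal a proxy is in the path.
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(real_ip: str, observed_ip: str, headers: dict[str, str]) -> str:
    """Classify a proxy as 'transparent', 'anonymous', or 'elite'."""
    lowered = {name.lower(): value for name, value in headers.items()}
    # Simplification: substring match on the real IP in any header value.
    leaked = observed_ip == real_ip or any(real_ip in v for v in lowered.values())
    if leaked:
        return "transparent"
    if any(name in lowered for name in PROXY_HEADERS):
        return "anonymous"  # IP hidden, but the proxy announces itself.
    return "elite"


if __name__ == "__main__":
    print(classify_anonymity("198.51.100.1", "203.0.113.9", {"Via": "1.1 proxy"}))
```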

It is also important to take into account the kind of proxy you are using, such as HTTP or SOCKS. SOCKS proxies, especially SOCKS5, often provide better anonymity and support more protocols. Evaluating your proxies under varied conditions, such as different traffic loads or access to multiple sites, can further help you measure their effectiveness and anonymity. Verifying the anonymity of your proxies regularly is vital, especially for tasks that require privacy and protection, such as data extraction.

Best Guidelines for Utilizing Proxies

When using proxies for web scraping or automation, it is essential to pick high-quality proxies to ensure reliability and speed. Consider both private and public proxies depending on your needs. Private proxies, although more expensive, deliver better performance and security, which makes them more suitable for tasks that require steady speed and anonymity. Public proxies can be used for minor tasks but frequently suffer from reliability and speed problems because they are shared.

Verify your proxies regularly to confirm they are working correctly. Use a high-quality proxy checker that can quickly test proxies for speed, anonymity, and whether they are still active. Speed verification is vital because a slow proxy delays your scraping tasks, while a dead proxy can cause your automation to fail. A proxy verification tool helps you keep a clean list of working, fast proxies.

Finally, respect the terms of service of the websites you are scraping. Overusing proxies or scraping too aggressively can lead to IP bans or other trouble. Implement rate limiting in your scraping programs to mimic human-like behavior and avoid being flagged by the target site. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies will also help you pick the right type for your scraping needs, so that you remain compliant and effective.
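Rate limiting can be as simple as enforcing a minimum gap between consecutive requests. The class below is a minimal sketch; the name `RateLimiter` and the injectable `clock` and `sleep` parameters (included so the behavior can be verified without real waiting) are assumptions made for illustration.

```python
import time


class RateLimiter:
    """Enforce a minimum interval, in seconds, between calls to wait()."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # Time of the previous permitted request.

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now


if __name__ == "__main__":
    limiter = RateLimiter(min_interval=0.5)
    for page in range(3):
        limiter.wait()  # Call before each request to the target site.
        print("fetching page", page)
```

Calling `wait()` before every request keeps the pacing logic in one place; adding a small random jitter to `min_interval` is a common refinement.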

Comparing Free vs Paid Proxies

When choosing between free and paid proxies, it's crucial to understand the main differences in reliability and performance. Free proxies are tempting because they are readily available and cost nothing, but they often suffer from slower speeds, more downtime, and weaker security. Many free proxy services are used by numerous users at the same time, which leads to unreliable performance and a higher chance of being blacklisted by target websites.

Paid proxies, by contrast, typically offer better performance and reliability. They are usually dedicated resources, meaning you won't have to share the IPs with a large number of other users. This exclusivity usually results in faster speeds and more consistent connections, allowing web scraping and automation tasks to run without interruptions. In addition, many paid proxy services include features like IP rotation, which further improves privacy and reduces the chance of being detected.

In the end, the choice between free and paid proxies depends on your specific needs and budget. If you only need a proxy for light browsing or testing, a free service may be enough. For serious data collection or commercial applications that require reliable performance, however, investing in a paid proxy is likely the better option.

Assessing Proxy Privacy: Crucial Techniques and Tools

April 6, 2025, 9:47

In the digital age, online anonymity has become vital for both individuals and businesses. Whether you are scraping data, performing market research, or simply seeking to improve your privacy, understanding how to use proxies effectively is key. Proxies serve as intermediaries between your device and the web, allowing you to browse while masking your actual IP address. Yet not all proxies are created equal. It's important to test proxy anonymity and effectiveness in order to maintain a safe online presence.

This article examines key methods and tools for testing proxy anonymity. We will look at various types of proxy scrapers and verifiers, how to build quality proxy lists, and the differences between protocols such as HTTP, SOCKS4, and SOCKS5. Whether you are looking for a fast proxy scraper for web scraping or the best free proxy checker for 2025, we will provide the insights you need. Join us as we go through the best practices for finding and verifying high-quality proxies suited to your requirements.

Understanding Types of Proxies

Proxies act as intermediaries between clients and the internet, relaying requests and responses. The most common types of proxy servers are HTTP, HTTPS, SOCKS4, and SOCKS5. HTTP proxies are designed specifically for web traffic, handling requests made by browsers. HTTPS proxies add a layer of security by encrypting the information exchanged, making them a preferred choice for maintaining confidentiality while browsing.

SOCKS proxies, which come in versions 4 and 5, are more general-purpose. SOCKS4 supports TCP connections and is appropriate for simple tasks, while SOCKS5 extends this by supporting both TCP and UDP. This makes SOCKS5 proxies well suited to a wider variety of uses, including video streaming and data transfers. Understanding these differences is crucial for selecting the appropriate proxy for specific requirements.

When considering proxies for activities like web scraping or automation, distinguishing between private and shared proxies is essential. Private (dedicated) proxies give a single user exclusive access, offering better performance, security, and reliability. Shared proxies, while often free and readily accessible, can be slower and less secure because multiple clients share the same IP address. Knowing the categories of proxies and their features helps users choose the best option for their tasks.

Key Techniques for Proxy Scraping

Regarding proxy scraping, one of the most effective techniques is using specialized proxy scrapers designed to gather proxies from multiple sources on the internet. Such tools can be set up to focus on specific websites known for free proxy lists, and they can automate the collection process to save resources. A reliable proxy scraper should support both HTTP and SOCKS proxies, providing a wide range of options for users based on individual needs.

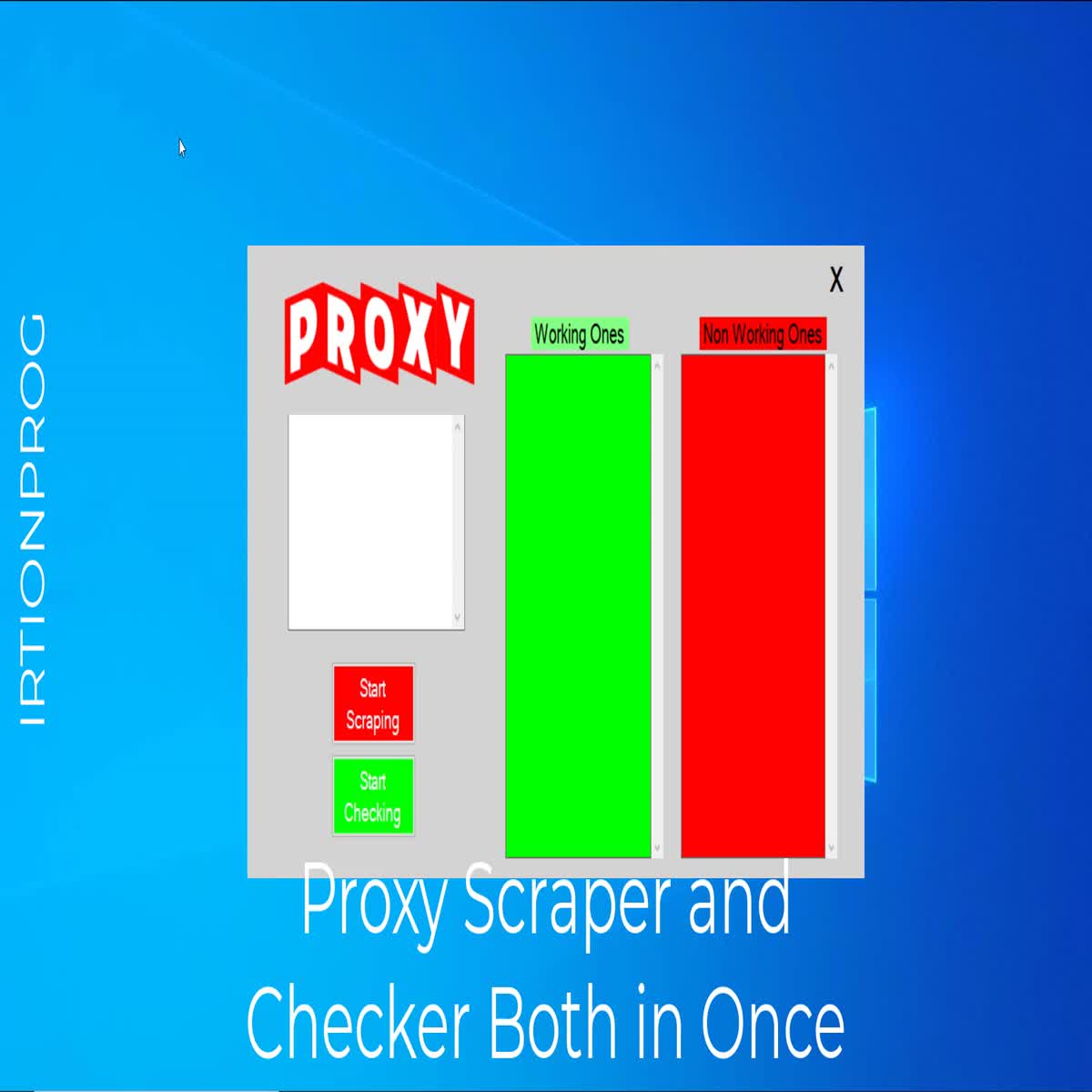

Another key method is to validate the proxies you obtain. This involves using a reliable proxy checker or verification tool that tests each proxy for anonymity, speed, and the ability to handle requests successfully. The best proxy checkers provide real-time results and classify proxies as transparent, anonymous, or elite, which is essential for optimizing the performance of web scraping tasks and avoiding bans.

Moreover, it's crucial to stay informed on the quality and sources of proxies. Utilizing online tools and forums focused on sharing proxy lists can help find high-quality proxies. Regularly checking the status of known proxy lists and employing a mix of free and paid options will enhance the overall effectiveness of proxy scraping. By combining these techniques, users can create a strong strategy for gathering and utilizing proxies for different applications such as web scraping and automation.

Evaluating Proxy Server Anonymity

When using proxy servers for browsing or web scraping, understanding their anonymity is critical. Proxies serve different functions, such as hiding your real IP address and getting around geographic restrictions. Yet not all proxies provide the same level of anonymity. The three main types are transparent, anonymous, and elite proxies, each offering a different level of protection. Transparent proxies expose your IP address to the destination website, while anonymous proxies hide it but can still be identified as proxies. Elite proxies deliver the highest level of anonymity, making it nearly impossible for sites to detect that a proxy is in use.

To determine the level of anonymity a proxy provides, you can use several methods. One common method is to run the proxy through the anonymity tests available online. These tests inspect the headers that arrive at the destination through the proxy. A tool that performs a thorough examination can reveal whether the proxy is transparent or anonymous based on the data it transmits. Running speed tests alongside these checks helps determine whether the proxy is also fast enough for the intended task.

When selecting proxies, it is crucial to consider their origin and credibility. Many public proxies claim to be anonymous but may be compromised or malicious. Private proxies, typically available for a fee, generally provide better security and stability. Use trustworthy proxy checkers that can confirm both the anonymity and the speed of your proxies. This careful assessment ensures that you maintain privacy while using proxies efficiently for data extraction, SEO tasks, or automation.

Best Proxy Tools and Resources

When working with proxies, having the right tools and resources is crucial for effective web scraping and anonymity testing. Proxy scrapers come first, allowing users to compile lists of proxy servers quickly. Notable options include free proxy scrapers that give access to proxy lists at no cost, and fast proxy scrapers that collect high-speed proxies quickly. Tools such as ProxyStorm also offer broader solutions for scraping proxies tailored to user needs, making it easier to maintain an up-to-date and dependable proxy list.

Validating proxies is just as important, which is where proxy verification tools come in. The best proxy checkers verify whether proxies are working and measure their speed and anonymity levels. Whether you need an HTTP proxy checker or a SOCKS proxy checker, there are tools designed specifically to deliver reliable information about the proxies in use. For those focused on performance, the best free proxy checkers for 2025 are becoming strong options for confirming that proxies meet performance standards before use.

Finally, finding high-quality proxies is key to good web scraping performance. Online proxy list generators can help you create custom lists tailored to specific requirements. In addition, a range of SEO tools with proxy support can help automate tasks using both dedicated and shared proxies. Understanding the distinctions between HTTP, SOCKS4, and SOCKS5 proxies also guides you in selecting the best option for each application, so that you collect data efficiently while maintaining privacy.

Best Practices for Web Scraping with Proxy Servers

When web scraping, it is essential to choose the right proxies to ensure both effectiveness and protection. Using a combination of private and public proxies can help balance the need for anonymity and performance. Private proxies typically offer better performance and reliability, while public proxies can be used for minor tasks. It's advisable to rotate proxies regularly to reduce the chance of IP bans and maintain a steady scraping rate. Proxy scrapers and proxy lists make it easier to find and manage proxies for your projects.
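Proxy rotation can be sketched with a simple round-robin pool that also drops proxies once they fail. The class and method names below (`ProxyRotator`, `next_proxy`, `mark_failed`) are hypothetical; this is one possible design, not a reference implementation.

```python
from collections import deque


class ProxyRotator:
    """Hand out proxies in round-robin order and drop failed ones."""

    def __init__(self, proxies):
        # dict.fromkeys removes duplicates while keeping the original order.
        self._pool = deque(dict.fromkeys(proxies))

    def next_proxy(self) -> str:
        """Return the next proxy, cycling through the pool."""
        if not self._pool:
            raise LookupError("no working proxies left")
        proxy = self._pool[0]
        self._pool.rotate(-1)  # Move the used proxy to the back.
        return proxy

    def mark_failed(self, proxy: str) -> None:
        """Remove a proxy that was banned or stopped responding."""
        try:
            self._pool.remove(proxy)
        except ValueError:
            pass  # Already removed or never in the pool.


if __name__ == "__main__":
    rotator = ProxyRotator(["203.0.113.1:8080", "203.0.113.2:8080"])
    print([rotator.next_proxy() for _ in range(3)])
```

In a scraping loop, each request would call `next_proxy()`, and any proxy that triggers a ban or timeout would be passed to `mark_failed()` so it is not reused.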

Another essential step is to monitor the performance of your proxies. A dependable proxy checker or testing tool helps confirm speed and anonymity levels. Testing proxies frequently lets you detect slow or dead IPs before they hold up your scraping. Understanding the distinctions between proxy types, such as HTTP, SOCKS4, and SOCKS5, also strengthens your scraping strategy, letting you choose the most suitable option for your needs.

Finally, respecting the target website's policies is essential. Always apply polite scraping techniques, such as setting appropriate crawl delays and staying within data extraction limits. This not only helps avoid IP bans but also makes for a responsible approach to data scraping. By following these practices, you can scrape successfully while minimizing disruption to the target site's operations.
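One concrete way to honor a site's policy is to read its `robots.txt` before crawling. The sketch below uses Python's standard `urllib.robotparser` on robots.txt text that has already been fetched; the helper name `crawl_policy`, the user agent string, and the fallback delay of one second are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser


def crawl_policy(robots_txt: str, user_agent: str, url: str, default_delay: float = 1.0):
    """Return (allowed, delay_seconds) for a URL under the given robots.txt."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    allowed = parser.can_fetch(user_agent, url)
    delay = parser.crawl_delay(user_agent)
    # Fall back to a conservative default when no Crawl-delay is declared.
    return allowed, (float(delay) if delay is not None else default_delay)


if __name__ == "__main__":
    rules = "User-agent: *\nCrawl-delay: 5\nDisallow: /private/\n"
    print(crawl_policy(rules, "my-bot", "https://example.com/private/page"))
    print(crawl_policy(rules, "my-bot", "https://example.com/public"))
```

The returned delay can be passed to whatever pacing mechanism the scraper already uses, and disallowed URLs can simply be skipped.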

Free versus Paid Proxy Services

When considering proxies for web scraping or online anonymity, one key decision is whether to use free or premium proxy services. Free proxies attract users with the promise of zero cost, making them an appealing option for individuals or small projects with limited budgets. However, these free services usually come with significant disadvantages, such as lower speeds, poorer reliability, and an increased risk of being blacklisted. Free proxies may also lack strong security features, exposing users to potential data breaches or malicious activity.

On the flip side, premium proxy services offer numerous advantages that can greatly enhance user experience and results. They typically provide faster connection speeds, more consistent uptime, and a bigger pool of IP addresses to choose from. Premium proxies can also offer better anonymity and privacy safeguards, reducing the chances of getting detected and blocked by websites. Many premium services offer additional features like advanced geo-targeting, dedicated IP addresses, and customer assistance, making them a more appropriate choice for dedicated web scraping and automation tasks.

Ultimately, the decision between free and premium proxy services depends on your specific requirements and circumstances. If your project requires high availability, security, and efficiency, investing in a trusted paid proxy service may be the best choice. For casual users or those just experimenting with proxies, starting with free options can provide a basic understanding of how proxies work, with the option to move to paid services as requirements evolve.

Emerging Trends in Proxy Solutions

Proxy technology is constantly evolving, with progress aimed at improving security and privacy. One notable trend is the shift toward more sophisticated encryption methods, which aim to protect user data while using proxy services. As security concerns grow, both individuals and organizations will increasingly demand proxy solutions with reliable security features, making it essential for providers to keep up by integrating advanced security techniques.

Another growing trend is the use of artificial intelligence and machine learning in proxy scraping and validation tools. AI algorithms can improve the efficiency of scraping tools by automating the detection of high-quality proxies and the removal of unreliable ones. This also enables better analysis of proxy performance, letting users quickly identify the best proxies for their needs. As a result, we can expect improved proxy verification tools that use AI for faster and more accurate results.

Finally, the move toward decentralized proxies is gaining pace, driven by the demand for more transparency and control in digital interactions. Peer-to-peer systems let individuals share their unused bandwidth, creating a more robust and democratic network of proxies. This not only improves privacy but also addresses concerns about centralized control of proxy services. As this movement continues, users will have more options for accessing high-quality, private proxy services without depending solely on traditional providers.

Public versus Private Proxy Servers: Everything You Need to Know

April 6, 2025, 9:24

In the digital age, anonymity and privacy have become crucial, particularly for those involved in web scraping, data extraction, and automation. At the core of maintaining this privacy are proxy servers, tools that act as intermediaries between the user and the internet. When it comes to proxies, you will often hear the terms public and private. Understanding the distinctions between these two categories is essential for anyone looking to navigate the web securely and efficiently.

Public proxies are generally available to the public and are often offered for free, making them an accessible option for casual internet users. However, their public availability can lead to problems such as slow performance, inconsistent reliability, and possible security threats. On the flip side, private proxies offer exclusive resources, enhancing privacy and speed at a higher price point. In this article, we will explore the subtleties of public and private proxies, delve into important tools like proxy scrapers and checkers, and provide guidance on how to identify superior proxies for your unique requirements. Whether you are just starting out trying to scrape proxies for free or an advanced user seeking the best tools for extracting data, this comprehensive guide will cover all that you need to understand.

Comprehending Proxies

Proxy servers serve as middlemen between users and the web, allowing for disguise and safety in digital interactions. When a client connects to a proxy server, their queries are channeled through that proxy server, which hides their IP address. This allows individuals to access restricted content, enhance privacy, and improve security by stopping direct exposure to dangerous websites or malicious entities.

There are different types of proxy servers, including HTTP, SOCKS4, and SOCKS5, each meeting specific requirements. HTTP proxies are designed specifically for web traffic, while SOCKS proxies provide a more flexible solution that can handle different types of traffic, including HTTP and FTP. SOCKS5 in particular offers additional features such as support for authentication and improved performance, making it a popular choice for users seeking better control and reliability.

The distinction between public and private proxies is important for users, especially in tasks like web scraping and automation. Public proxy servers are often free and widely available, but they can be less trustworthy and slower because many users share them. In contrast, private proxies are typically subscription-based services that offer exclusive access, higher speed, and improved privacy, making them preferable for more serious applications where efficiency and safety are critical.

Public vs Private Proxies

Open proxies are resources that are available to everyone on the web. They are often complimentary or very low cost, which makes them an appealing option for occasional users or those who need to collect proxies for quick tasks. However, since these proxies are available to everyone, they tend to be more unstable and more prone to outages, slow speeds, and security issues. Many users may encounter IP blocks or throttling when utilizing open proxies for web scraping or automated tasks, as they are used by many individuals at the same time.

In contrast, private proxies are reserved for a specific user or organization. This exclusivity provides a far more stable and secure connection, making them well suited to activities that require consistent performance, such as large-scale data extraction or web automation. Private proxies typically offer better privacy and speed, as their restricted user base results in lower traffic. Users who want to carry out critical web scraping or who need specific geographic locations may find private proxies to be the superior choice.

The decision between public and private proxies ultimately depends on your needs and budget. If you are looking for performance, reliability, and privacy, private proxies are worth the investment. On the other hand, if you are experimenting with proxy scraping software or only need proxies for temporary tasks, public proxies may meet your needs. Understanding the differences can help you choose the proxies best suited to your specific applications.

Finding and Scraping Proxies

When you set out to find and scrape proxies, various approaches and resources can help. One effective method is to use a free proxy scraper that automatically gathers available proxies from various sources on the internet. These scrapers scan websites and forums that list proxies and pull out live, working entries. Tools like ProxyStorm can speed up this work and give access to a broader range of proxies, which can be crucial for tasks that require many different IP addresses.

Once you have a list of potential proxies, it is crucial to verify their performance and speed. This is the point at which a reliable proxy checker is essential. By running your collected proxies through a validating tool, you can determine whether they are operational, their response times, and their anonymity levels. Utilizing the top free proxy checker out there for 2025 will ensure you only retain high-quality entries in your final list. This validation step is crucial as not all scraped proxies are certain to be functional or appropriate for your specific needs.

If you're interested in more advanced techniques, proxy scraping with Python is a popular way to automate the process. Python offers packages and frameworks that simplify both scraping and validating proxies. By writing custom scripts, users can tailor their scraping to particular websites and filter proxies by the attributes they want, such as HTTP, SOCKS4, or SOCKS5 type. This enables a more customized approach to finding high-quality proxies for tasks such as web scraping and automation.
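Filtering a scraped list by protocol can be sketched as follows. The example assumes each entry is either a full proxy URL such as `socks5://host:port` or a bare `host:port`, which is treated as HTTP; the function name `group_by_protocol` and that default are illustrative assumptions, since real lists label proxy types in many different ways.

```python
from collections import defaultdict
from urllib.parse import urlparse


def group_by_protocol(entries, default_scheme="http"):
    """Group 'host:port' strings by proxy scheme, skipping malformed entries."""
    groups = defaultdict(list)
    for entry in entries:
        entry = entry.strip()
        if not entry:
            continue
        if "://" not in entry:
            entry = f"{default_scheme}://{entry}"  # Assume HTTP if unlabeled.
        try:
            parsed = urlparse(entry)
            port = parsed.port  # Raises ValueError for a non-numeric port.
        except ValueError:
            continue
        if parsed.hostname and port:
            groups[parsed.scheme.lower()].append(f"{parsed.hostname}:{port}")
    return dict(groups)


if __name__ == "__main__":
    scraped = ["socks5://203.0.113.4:1080", "198.51.100.2:8080", "socks4://bad:port"]
    print(group_by_protocol(scraped).get("socks5", []))
```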

Proxy Connection Performance and Confidentiality Evaluation

When using proxy servers, assessing their performance is crucial for efficiency, especially in data extraction or automation tasks. You can use various proxy verification tools to measure the speed and latency of proxy servers. Fast scraping applications often come with features that not only gather lists of proxies but also let you verify the speed of each entry. This helps eliminate slow and non-functional proxies, which results in more effective web scraping and browsing.

Anonymity is another important factor when selecting proxies, especially when handling private information or bypassing regional restrictions. Different proxies offer different levels of anonymity, ranging from transparent to highly anonymous. A proxy's anonymity can be verified with tools that check HTTP headers, information leaks, and other signs. Whether a proxy is HTTP, SOCKS4, or SOCKS5 can also influence its privacy and functionality, so it is worth checking these characteristics during your assessment.

To evaluate both speed and anonymity, using a mix of testing tools is suggested. Applications designed for proxy scraping can gather candidate proxies, while checker applications can measure their performance. By conducting thorough tests, users can find reliable connections that improve their data extraction and scripting work, providing a smoother and safer experience.

Best Proxy Tools for Web Scraping

When considering web scraping, picking the right proxy tools is crucial for effective data extraction. Among the top tools, Proxify is notable for its user-friendly interface and strong features. It offers a dependable proxy scraper that effectively gathers premium proxies from multiple sources, ensuring you can access the data you need without interruptions. This tool is notably beneficial for those looking to scrape data without facing blocks by target websites.

In addition, using a proxy checker is important for validating the functionality and speed of the proxies you gather. The best proxy checkers allow users to easily test various proxies at once, confirming they are working as intended. These tools typically provide detailed information about the proxies' performance and security measures, helping you pick the most suitable ones for your web scraping tasks. An excellent choice for this is the leading free proxy checker for 2025, which combines both effectiveness and user-friendliness.

Ultimately, incorporating a proxy list generator online into your scraping workflow can simplify the process of finding and handling proxies. These generators can produce large lists of complimentary proxies, allowing you to easily integrate them into your scraping projects. They are particularly useful for web scraping with Python, where the ability to quickly access trustworthy proxies can substantially enhance your automation efforts and improve general scraping performance. By utilizing these tools, you can optimize your web scraping endeavors and secure consistent access to data streams.

Free vs Paid Proxy Services

When looking into proxies for your online tasks, it's crucial to know the differences between free and paid options. Free proxies are attractive because they cost nothing, but they come with significant drawbacks. Most free proxies have limited reliability and can perform poorly, with slow speeds and high latency. Additionally, these proxies are typically shared by many users, which can lead to service interruptions and privacy issues, as they may log your data or expose you to malicious activity.

On the other hand, paid proxy services provide a higher quality and safer choice. These proxies typically offer better speed and reliability, as well as exclusive connections, which minimizes the chance of being blocked or flagged by sites. Premium proxy services often come with assistance and various options like proxy rotation and privacy protection. This makes them perfect for tasks that require steady performance, including web scraping and SEO activities.

In the end, the decision between free and paid proxy services depends on your specific needs. For casual browsing, free proxy services might be sufficient. However, for web scraping, data extraction, or any activity that needs anonymity and speed, paying for a good premium provider is generally the better option. It can save you time and frustration, allowing you to focus on your primary objectives without the restrictions of free proxies.

Conclusion

Within the world of web scraping and data extraction, comprehending the differences among public and private proxies is essential. Open proxies, although complimentary and readily accessible, often come with limitations such as sluggish speeds, lower security, and higher risks of being banned. Conversely, dedicated proxies provide superior performance, better anonymity, and greater reliability, making them a favored choice for professional web scrapers and automated tasks.

Choosing the right proxy solution hinges on your specific needs. If you are starting out or conducting less demanding scraping tasks, a free proxy scraper may suffice. Nonetheless, as your needs grow and you seek to scrape high-quality data or streamline processes, purchasing a quick proxy scraper or a reliable proxy verification tool becomes essential. Always evaluate the speed and anonymity of your proxies to guarantee optimal performance.

Finally, whether you choose open or private proxies, the key is to utilize the existing tools efficiently. By using the appropriate proxy list generator and checking utilities, you can manage the challenges of proxy usage, making certain that your web scraping endeavors are successful and productive.

Steps: How to Verify if a Proxy Connection is Working

April 6, 2025, 9:10

In today's digital world, the demand for privacy and information protection has driven many users to explore proxy servers. Whether you are conducting web data extraction, managing SEO tasks, or just looking to secure your online activities, knowing how to verify that a proxy is working is important. Proxy servers act as bridges between your computer and the internet, allowing you to mask your IP address and access content that may be blocked in your area. However, not all proxies are equal, and a malfunctioning proxy can hinder your work and cause frustration.

This guide will take you through a detailed journey to verify that your proxies are functioning properly. We will cover various tools and methods, including scraping tools and checkers, to help you find, verify, and test your proxy setup. Additionally, we will cover key concepts such as the differences between HTTP, SOCKS4, and SOCKS5 proxies, and how to determine the speed and privacy of your proxies. By the end of this article, you'll be fully prepared with the knowledge to effectively manage your proxy usage for web scraping, automation, and more.

Comprehending Proxies

Proxy servers act as go-betweens between internet users and the internet, allowing for increased confidentiality and safety. Whenever you connect to the internet through a proxy server, your queries are directed through the proxy server, which hides your IP address. This makes it challenging for websites and online services to track your web surfing activity, providing a layer of anonymity vital for various internet activities.

There are various types of proxy servers, including HTTP, SOCKS4, and SOCKS5, each serving specific functions. HTTP proxies are typically used for web traffic and are well suited to general browsing, while SOCKS proxies support a larger range of protocols, making them appropriate for applications like file sharing and online gaming. Understanding the differences between these kinds helps in selecting the correct proxy for a given need.

Using proxies effectively necessitates comprehending how to verify if they are functioning properly. This involves using a proxy checker to assess their speed, anonymity, and reliability. With a variety of options available, including quick scraping tools and specific proxy checkers, individuals can make sure they are utilizing high-quality proxies for tasks such as web scraping, automation, and extracting data.

Introduction to Proxy Scraping Tools

Proxy scraping software is necessary for anyone looking to gather and validate proxies for online operations such as content harvesting and task automation. These tools collect lists of available proxies from various sources, providing a steady supply of IP addresses. With the increasing need for security online, a trustworthy proxy scraper can greatly simplify the task of acquiring working proxies.

One of the most important benefits of proxy scraping tools is their ability to filter and organize proxies by specific criteria, such as speed, level of anonymity, and type (HTTP, SOCKS4, SOCKS5). For instance, a fast proxy scraper can help find high-speed proxies suitable for time-critical activities, while a proxy verifier can test the working state and stability of each gathered proxy. This functionality is important for anyone who uses proxies for web scraping or data extraction, as it directly affects their results.

In the past few years, the landscape of proxy scraping tools has developed, offering options for both beginners and experienced users. Free proxy scrapers are available for those with tight budgets, while advanced tools provide more features and support for users willing to pay. As the demand for proxies continues to grow, staying current with the leading proxy sources and tools is important for effective online activities.

Methods to Collect Proxies for Free

Gathering proxy servers for free is a viable way to build proxy lists at no cost. One of the simplest techniques is to use popular platforms that publish lists of free proxies. Many of these sites keep their lists current and provide information on each proxy's type, performance, and level of anonymity. By checking these platforms, you can assemble a variety of proxy servers to test for usability later.

Another technique is to collect proxies with automation tools or libraries. For example, Python has several libraries, such as Beautiful Soup and Scrapy, that can be adapted to gather proxy data from specific websites. With a basic script that requests the content of sites publishing proxy lists, you can gather and compile a list of available proxy servers in a few minutes, giving you a scalable way to collect proxies.
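The extraction half of such a script can be sketched with the standard library alone. This is an illustrative sketch, not a complete scraper: it assumes the page text (already downloaded by whatever HTTP client you prefer) lists proxies as plain `ip:port` pairs, and the `extract_proxies` name is hypothetical. Sites that put the IP and port in separate table cells would need an HTML parser such as Beautiful Soup instead.

```python
import re

# Matches IPv4-looking text followed by ":port".
PROXY_RE = re.compile(r"\b((?:\d{1,3}\.){3}\d{1,3}):(\d{2,5})\b")


def extract_proxies(page_text):
    """Return unique, plausibly valid 'ip:port' strings in order of appearance."""
    found = []
    seen = set()
    for host, port in PROXY_RE.findall(page_text):
        # Reject octets above 255 and ports outside the valid range.
        octets_ok = all(int(octet) <= 255 for octet in host.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{host}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            found.append(candidate)
    return found
```

The result is only a list of candidates; each entry still has to be tested before use.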

It's important to validate the proxies you collect to make sure they are functional. Post-scraping, use a proxy testing tool to check the proxy servers for their uptime, performance, and level of anonymity. This process is essential to eliminate broken proxy servers from your list and to concentrate on those that deliver the best performance for your requirements. By consistently scraping and checking proxy servers, you can maintain a strong and reliable proxy list for your web scraping needs and automation tasks.

Evaluating Proxy Anonymity and Performance

When using proxy servers, evaluating their anonymity and speed is crucial for effective web scraping and automation. Privacy levels can vary significantly with the type of proxy being used, including HTTP, SOCKS4, and SOCKS5. To determine how private a proxy is, you can use online tools that show the IP address they see. If the tool shows your actual IP, the proxy is probably transparent. If it shows a different IP, you have a higher privacy level, but further testing is needed to classify the proxy as anonymous or elite.

Measuring proxy performance involves checking latency and response times. High-quality proxies have low latency and quick response times, making them suitable for tasks that demand speed, like automated data extraction. One way to test performance is to use a proxy verification tool that pings the proxy server and reports on its efficiency. Additionally, you can run basic HTTP requests through the proxy and measure the time taken to obtain a reply. This allows you to compare proxies and spot the quickest ones.
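Timing a basic request through a proxy could look like the sketch below. It uses only the standard library; the `timed_fetch` name and the default timeout are assumptions for illustration. Note that `urllib.request.ProxyHandler` speaks to HTTP proxies only, so SOCKS proxies would need a third-party library such as PySocks.

```python
import http.client
import time
import urllib.request


def timed_fetch(url, proxy_url, timeout=10.0):
    """Fetch `url` through `proxy_url` and return (ok, seconds_elapsed)."""
    handler = urllib.request.ProxyHandler({"http": proxy_url, "https": proxy_url})
    opener = urllib.request.build_opener(handler)
    start = time.perf_counter()
    try:
        with opener.open(url, timeout=timeout) as response:
            response.read()  # include body transfer in the measurement
            ok = 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, HTTP errors, and bad replies.
        ok = False
    return ok, time.perf_counter() - start
```

Running this against the same test URL for every proxy in your list gives comparable numbers; a single measurement is noisy, so repeating it a few times per proxy is usually worthwhile.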

Evaluating both anonymity and performance should be an ongoing process, especially when scraping over time. Proxy quality can change over time due to multiple variables, including server load and network changes. Regularly running a proxy verification tool ensures that you keep a working proxy list for your requirements. By combining both evaluations, you can effectively filter out poor-quality proxies, ensuring maximum performance for your web scraping or automation tasks.

Choosing the Best Proxy Sources

When it comes to finding the ideal proxy sources, it is essential to consider your own requirements and use cases. Certain proxies are more effective for web scraping, while others are better suited to automation or browsing. Look for providers that supply a reliable mix of both HTTP and SOCKS proxies, as this gives you flexibility across different applications. Make sure that the source you choose has a reputation for high-quality service and user satisfaction.

Another crucial aspect is the regional diversity of the proxies. If your data extraction or automation tasks require access to region-specific content, prioritize providers that supply proxies from multiple countries and locations. This will help you get past geolocation restrictions and ensure that your web scraping yields the desired results without being blocked. Always verify the legitimacy of the proxy provider to avoid potential issues such as IP bans or slow connections.

To wrap up, think about the cost structure of the proxy providers. Some providers offer free proxies while others have subscription plans. Free proxies can be enticing, but they often come with drawbacks such as low speed and poor reliability. Paid proxies typically provide better performance, confidentiality, and customer support. Evaluate your budget and weigh the benefits of free versus premium options before deciding, as spending on good proxies can significantly improve your success in web scraping and automation tasks.

Utilizing Proxy Servers for Data Extraction from the Web

Web scraping is a powerful technique for gathering data from websites, but it often comes with difficulties, particularly accessing data without being blocked. This is where proxy servers come into play. A proxy acts as a buffer between your web scraper and the target website, allowing you to make requests without exposing your actual IP address. This anonymity helps to reduce IP bans and lets your scraping continue uninterrupted.

When deciding on proxies for web scraping, it's important to consider the kind of proxy that best fits your requirements. HTTP proxies are often used for extracting data from websites, while SOCKS proxies offer more versatility and can handle different types of traffic. Additionally, the performance and dependability of the proxies are key, as slow connections can hinder your scraping performance. Utilizing a proxy checker can help you verify the functionality and speed of your proxy list before initiating large scraping tasks.

Furthermore, the ethical aspects of web scraping should not be neglected. It's essential to honor the terms of service of the websites you scrape. High-quality proxies let you distribute your requests across various IP addresses, reducing the probability of being flagged as suspicious. By using proxies strategically, you can enhance your web scraping capabilities while following best practices.
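Distributing requests across several IP addresses is often done with simple round-robin rotation. The following is a minimal sketch of that idea; the `ProxyRotator` class and `assign_proxies` function are hypothetical names, and real scrapers typically add retry and removal of dead proxies on top.

```python
import itertools


class ProxyRotator:
    """Hand out proxies in round-robin order."""

    def __init__(self, proxies):
        proxies = list(proxies)
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._cycle = itertools.cycle(proxies)

    def next_proxy(self):
        return next(self._cycle)


def assign_proxies(urls, proxies):
    """Pair each URL with the next proxy in the rotation."""
    rotator = ProxyRotator(proxies)
    return [(url, rotator.next_proxy()) for url in urls]
```

Each `(url, proxy)` pair can then be passed to whatever function performs the actual request.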

Common Issues and Troubleshooting

When using a proxy, one common issue that arises is failure to connect. This can occur for several causes, such as the proxy being down, mistaken proxy configuration, or network barriers. To resolve it, first ensure that the proxy address and port are set up properly in your tool. If the settings are correct, check the status of the proxy server to see if it is online. You can use a dependable proxy status checker to verify the status of the proxy.
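The first two troubleshooting steps (checking the address format, then checking whether the server is online) can be sketched as below. This is an assumption-laden illustration: it expects a plain `host:port` string, and a successful TCP connection only shows that something is listening on that port, not that it behaves as a working proxy. The function names are hypothetical.

```python
import socket


def parse_proxy(address):
    """Split 'host:port' into (host, port), raising ValueError if malformed."""
    host, sep, port_text = address.strip().rpartition(":")
    if not sep or not host or not port_text.isdigit():
        raise ValueError(f"expected host:port, got {address!r}")
    port = int(port_text)
    if not 1 <= port <= 65535:
        raise ValueError(f"port out of range in {address!r}")
    return host, port


def is_reachable(address, timeout=3.0):
    """Return True if a TCP connection to the proxy can be opened."""
    host, port = parse_proxy(address)
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, timed out, unreachable network, or DNS failure.
        return False
```

If `parse_proxy` raises, the configuration is the problem; if `is_reachable` returns `False`, the proxy is down or blocked somewhere on the network path.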

Another problem users often experience is reduced speed. If your proxy server is not responding quickly, the proxy may be overloaded or located far from you. To improve speed, try different proxy servers and use a fast proxy finder to locate quicker ones. Additionally, if you are using a free proxy, be aware that these tend to be slower than premium proxies.

Privacy issues can also happen, where the proxy may not be sufficiently concealing your IP. To check your anonymity, use a reliable anonymity checker that checks whether your true IP address is being leaked. If the server is found to be transparent or offers insufficient anonymity, it may be best to switch to a better or private proxy source. For data extraction and automation tasks, ensuring you have a premium proxy is essential for both effectiveness and security.

Web Scraping Proxy Servers: Choosing Between Free and Premium

April 6, 2025, 9:09

In the ever-evolving landscape of web scraping, the selection of proxies plays a vital role in ensuring successful data extraction while preserving anonymity. As both individuals and companies seek efficient methods to gather data from various sites, understanding the differences between free and paid proxies becomes imperative. While free proxies may seem appealing because they cost nothing, they often come with limitations such as reduced speeds, less reliability, and increased chances of being blocked. On the other hand, paid proxies offer improved performance, better security, and greater support for users who demand high-quality data scraping.

An appropriate proxy solution can greatly influence the efficacy of your scraping activities. Whether you're a programmer looking to implement a proxy scraper in Python, a SEO expert utilizing SEO tools with proxy integration, or simply someone trying to gather data online, knowing how to navigate the world of proxies will empower you to extract information efficiently. In this article, we'll discuss the various types of proxies, the most effective tools to both scrape and check proxies, and provide advice on how to locate high-quality proxies that fit your specific needs, helping you make wise decisions in your web scraping efforts.

Proxies: An Overview of Types and Definitions

Proxy servers act as intermediaries between a user's device and online services, allowing users to send requests while hiding their actual IP addresses. They are often used for multiple purposes, such as enhancing privacy, overcoming restrictions, and increasing anonymity while surfing or collecting data. The primary function of a proxy is to receive requests from the user, forward them to the target server, and then send the server's response back to the user.

There are several types of proxies, each suited to specific tasks. HTTP proxies are built for managing web traffic and work with common web protocols. SOCKS proxies, on the other hand, can carry various types of traffic, such as HTTP, FTP, and more, making them more versatile. Within this class there are subtypes such as SOCKS4 and SOCKS5, with the latter adding authentication methods as well as UDP and IPv6 support.

When choosing among different proxy types, it is essential to evaluate factors like speed, anonymity, and usage requirements. Private proxies, offered for individual users, provide higher reliability and security in contrast to public proxies, that can be slower and less secure due to shared usage. Grasping these variances can help users choose the right proxy solution for their web scraping and automation needs.

Free vs Paid Proxies: Pros and Cons

Free proxies are often appealing due to their availability and lack of cost. They require no financial investment, making them ideal for casual users or those just starting with web scraping. However, the trade-off often comes at the expense of dependability, as free proxies may be slow, unstable, and overused by many users, leading to regular downtime and restricted bandwidth. Additionally, safety can be a worry, as some free proxies may log user data or insert unwanted advertisements.

On the flip side, paid proxies offer significant advantages in terms of efficiency and security. Users usually experience faster speeds and greater uptime, as these proxies are dedicated rather than shared among many users. Premium services often include improved security features, such as encryption and anonymity, which are critical for sensitive web scraping tasks. Furthermore, many premium services allow more customization, enabling users to select between proxy types, such as HTTP, SOCKS4, or SOCKS5, based on their particular needs.

Ultimately, the choice between costless and premium proxies depends on the user's requirements and budget. For light usage or experimental scraping tasks, free proxies may suffice. However, for substantial data extraction projects where velocity, dependability, and safety are crucial, investing in paid proxies is usually the recommended approach. This ensures a more seamless scraping experience and reduces the likelihood of encountering issues during data collection.

Premier Applications for Harvesting and Verifying Proxy Servers

When it comes to web scraping, having a dependable and efficient proxy scraper is essential. Several popular tools let users gather, filter, and manage proxy lists with ease. Tools like ProxyStorm are favored for their ability to scrape proxies from multiple sources with minimal input. A fast proxy scraper can significantly improve your scraping efficiency, allowing you to access data more quickly. For those looking for a free proxy scraper, there are multiple options that can help you start scraping without any upfront investment.

Once you've collected a list of proxies, ensuring their functionality is crucial. The top proxy checker tools can check if proxies are working properly, as well as check their speed and anonymity levels. A quality proxy verification tool will assess multiple proxies at once, giving detailed information about their performance. Among the top free proxy checkers available in 2025, some focus on user-friendliness and speed, making it easy to manage and test your proxies efficiently.
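Assessing multiple proxies at once is commonly done with a thread pool, since each check spends most of its time waiting on the network. The sketch below assumes you supply your own `check_one(proxy)` function returning a truthy value for a working proxy; both that callback and the `check_many` name are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def check_many(proxies, check_one, max_workers=20):
    """Run check_one(proxy) for every proxy in parallel; return {proxy: bool}."""
    proxies = list(proxies)

    def safe_check(proxy):
        # Treat any exception from the checker as a failed proxy.
        try:
            return bool(check_one(proxy))
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        results = list(pool.map(safe_check, proxies))
    return dict(zip(proxies, results))
```

Keeping the per-proxy check as a parameter means the same function works whether the check is a TCP connect, an HTTP request, or an anonymity test.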

In addition to scraping and verification tools, using a proxy server generator online can save time when you need to generate new proxies quickly. Understanding the difference between HTTP and SOCKS proxies is also important for selecting the right tool for your needs. Whether you're working on automation tasks, data extraction, or SEO tools with proxy support, leveraging the best tools in proxy scraping and verification will enhance your web scraping initiatives considerably.

How to Check Proxy Speed and Privacy

Verifying proxy speed is important for ensuring efficient web scraping. The first step is to choose a reliable proxy checker tool or create a custom script in Python. These tools can evaluate response times by dispatching requests through the proxy and timing how long it requires to get a response. Look for proxies that consistently show low latency, as this will significantly impact the overall performance of your scraping activities.

To assess proxy anonymity, you should check for headers that may reveal your real IP address. Use a trusted proxy verification tool that checks the HTTP headers for signs of exposure. A genuinely anonymous proxy will not reveal your original IP address in the headers it forwards to the target server. You can use tools that check both public and private proxies, making sure that the proxies you select don't compromise your identity.
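The header check can be sketched as a small classifier. It assumes you already have the headers as the target server received them (for example from a header-echo endpoint you control), and it uses the common transparent / anonymous / elite naming; the header list and the `classify_anonymity` name are assumptions for illustration, not a standard.

```python
# Headers that commonly indicate a proxy is in the path (illustrative list).
PROXY_HEADERS = {"via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection"}


def classify_anonymity(headers, real_ip):
    """Classify a proxy from the headers the target server saw."""
    lowered = {name.lower(): str(value) for name, value in headers.items()}
    # Your real IP appearing in any header value means it leaked.
    if any(real_ip in value for value in lowered.values()):
        return "transparent"
    # No leak, but the request still announces that a proxy was used.
    if PROXY_HEADERS.intersection(lowered):
        return "anonymous"
    return "elite"
```

The substring match on `real_ip` is deliberately simple and could misfire on addresses that contain yours as a prefix; a stricter version would parse the header values.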

Merging speed checks with anonymity assessments offers a full picture of a proxy's reliability. For better verification, consider running tests at different times of the day or under different network conditions. This method helps identify high-quality proxies that can manage your scraping tasks without interruptions, ensuring that you have fast and anonymous browsing sessions.
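Results from repeated test runs can be combined into a per-proxy summary. The sketch below assumes each sample is a `(proxy, latency)` pair with `None` recorded for a failed attempt; the `summarize` name and the choice of median latency are illustrative.

```python
from statistics import median


def summarize(samples):
    """Aggregate (proxy, latency_or_None) samples into per-proxy statistics."""
    by_proxy = {}
    for proxy, latency in samples:
        by_proxy.setdefault(proxy, []).append(latency)

    report = {}
    for proxy, values in by_proxy.items():
        successes = [v for v in values if v is not None]
        report[proxy] = {
            "success_rate": len(successes) / len(values),
            # Median is less sensitive to one slow outlier than the mean.
            "median_latency": median(successes) if successes else None,
        }
    return report
```

Sorting the report by success rate first and median latency second gives a reasonable shortlist of proxies to keep.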

Finding and Generating Top-Notch Proxies

When looking for top-notch proxies, it is crucial to focus on reliable sources that regularly update their proxy lists. Many web scraping communities and forums share curated lists of proxies that have been tested for performance and privacy. Using a mix of free proxy scrapers and dedicated web scraping tools can help you collect a substantial list of proxies. Check how recently each source was updated and how trustworthy it is, so that the proxies you acquire are less likely to cause failed requests during scraping.

In addition to relying on community-sourced proxies, think about using proxy generators and dedicated proxy scrapers. These tools find and validate proxies across multiple geographic locations. Fast proxy scrapers can help you identify not only working proxies but also those that deliver the best speed and privacy levels. Don't forget to test the proxies with a dependable proxy checker to verify their speed and anonymity, ensuring that the proxies you select meet your specific scraping needs.

Finally, for extended and serious web data extraction projects, putting money in paid private proxies could be beneficial. Private proxies offer greater levels of security, reduced risk of being blocked, and superior performance compared to shared proxies. When choosing private proxies, search for services that offer ample support, a broad pool of IP addresses, and high anonymity levels. This method enables you to use proxies effectively in automated processes and data extraction tasks while lessening interferences and enhancing your scraping success.

Common Use Cases for Web Scraping Proxies

Web scraping proxies are crucial for a range of tasks, particularly those involving large volumes of data. One frequent use case is data extraction from e-commerce sites. Analysts and businesses frequently need to collect pricing information, product descriptions, and availability status from competitors. Using proxies in this situation helps to work around rate limits imposed by websites, allowing for uninterrupted scraping without being blocked.

Another major application is market research and sentiment analysis. Companies often extract data from social media platforms and forums to collect user opinions and trends about products or services. Proxies are essential in this process because they enable access to geo-restricted content and reduce the chance of detection by the platforms. This way, organizations can collect data discreetly, gaining insights that guide marketing strategies and product development.

Finally, SEO professionals utilize web scraping proxies to track keyword rankings and analyze backlinks. By scraping search engine results and competitor websites, SEO experts can discover opportunities for improvement and track their site's performance over time. Proxies ensure that these activities are carried out without being marked as bot activity, thus maintaining the integrity of the data collected for analysis.

Conclusion: Choosing the Right Option

Choosing among free and paid web scraping proxies demands careful consideration of your specific requirements and use cases. Complimentary proxies are often appealing due to the lack of expenses, but they tend to come with notable drawbacks such as reduced speed, increased failure rates, and potential issues with privacy. For occasional users or individuals just starting out, free proxies might suffice for basic tasks or testing purposes. However, for more extensive scraping operations or tasks that demand reliability and speed, paid proxies are generally a superior investment.

Paid proxies offer enhanced performance and better security features, helping ensure that your web scraping efforts are efficient and safe. A reputable provider will often provide features like proxy verification features, high anonymity, and a broader selection of geographical locations. When choosing a premium service, you can also benefit from assistance that can be essential when resolving issues related to proxy connectivity or performance. This investment is particularly worthwhile for companies or professionals who depend on web scraping for data extraction or competitive analysis.

In conclusion, the right choice depends on the balance of your budget and your requirements. If you are looking for high-quality, dependable, and quick proxies, it may be worth considering a premium option. Conversely, if you are experimenting or engaged in a single project, exploring free proxies might be a practical choice. Assess the potential benefits and downsides associated with each option to decide which proxy solution matches most closely with your web scraping goals.