From Zero to Hero: Building a Proxy List Generator

April 6, 2025, 12:15

In the dynamic world of data extraction and web scraping, having the right tools at your disposal can make all the difference. An essential resource for web scrapers is a robust proxy list generator. Proxies act as intermediaries between your scraping tool and the target website, allowing you to work around restrictions, maintain anonymity, and improve the throughput of your data collection efforts. This article will guide you through the process of developing an efficient proxy list generator, highlighting the essential components such as proxy scrapers, checkers, and verification tools.

As the demand for reliable proxies continues to rise, knowing how to source and verify both free and paid proxies is a valuable skill. Whether you are looking to scrape data for SEO purposes, automate tasks, or gather insights for research, finding high-quality proxies is crucial. We will explore different types of proxies, from HTTP to SOCKS, and discuss the differences and best use cases for each. By the end of this article, you will have a solid understanding of how to create your own proxy list generator and use the best tools available for successful web scraping.

Understanding Proxy Servers and Their Categories

Proxies serve as intermediaries between a client and the web, relaying requests and responses while masking the user's true identity. They play a crucial role in data scraping, task automation, and maintaining anonymity on the internet. By channeling internet traffic through a proxy, users can access content that may be restricted in their geographical region and enhance their online privacy.

There are several categories of proxies, each catering to different needs. HTTP proxies are built specifically for web traffic, while SOCKS proxies are protocol-agnostic, making them suitable for traffic beyond web browsing, such as FTP or email. SOCKS4 and SOCKS5 are the two common versions, with SOCKS5 adding features such as UDP support and username/password authentication. Understanding these differences is essential for selecting the appropriate proxy for a particular task.
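To make the protocol distinction concrete, here is a minimal sketch of how a proxy's type might be encoded as a URL scheme. The function name `build_proxy_mapping` is hypothetical. The returned dictionary follows the shape that the Requests library accepts for its `proxies` argument, where SOCKS schemes need the optional SOCKS extra to be installed.

```python
from urllib.parse import quote

# Schemes this sketch knows about (an assumption, not an exhaustive list).
SUPPORTED_SCHEMES = {"http", "socks4", "socks5"}


def build_proxy_mapping(host, port, scheme="http", username=None, password=None):
    """Return a {"http": url, "https": url} mapping for one proxy."""
    if scheme not in SUPPORTED_SCHEMES:
        raise ValueError(f"unsupported proxy scheme: {scheme}")
    if scheme == "socks4" and password is not None:
        # SOCKS4 carries only a user ID; password authentication arrived with SOCKS5.
        raise ValueError("SOCKS4 does not support password authentication")

    credentials = ""
    if username is not None:
        # Percent-encode credentials so characters like "@" do not break the URL.
        credentials = quote(username, safe="")
        if password is not None:
            credentials += ":" + quote(password, safe="")
        credentials += "@"

    url = f"{scheme}://{credentials}{host}:{port}"
    # The same proxy is used for both plain and TLS traffic.
    return {"http": url, "https": url}


print(build_proxy_mapping("203.0.113.7", 1080, scheme="socks5"))
```

The addresses used here come from documentation-only IP ranges and are placeholders, not working proxies.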

When it comes to web scraping and data extraction, the difference between private and public proxies is vital. Dedicated (private) proxies are allocated to a single client, offering greater security and speed, while shared proxies are used by multiple users at once, which can lead to slower speeds and a higher chance of getting banned. High-quality proxies can greatly improve the efficiency of data extraction tools and help ensure reliable data collection from multiple sources.

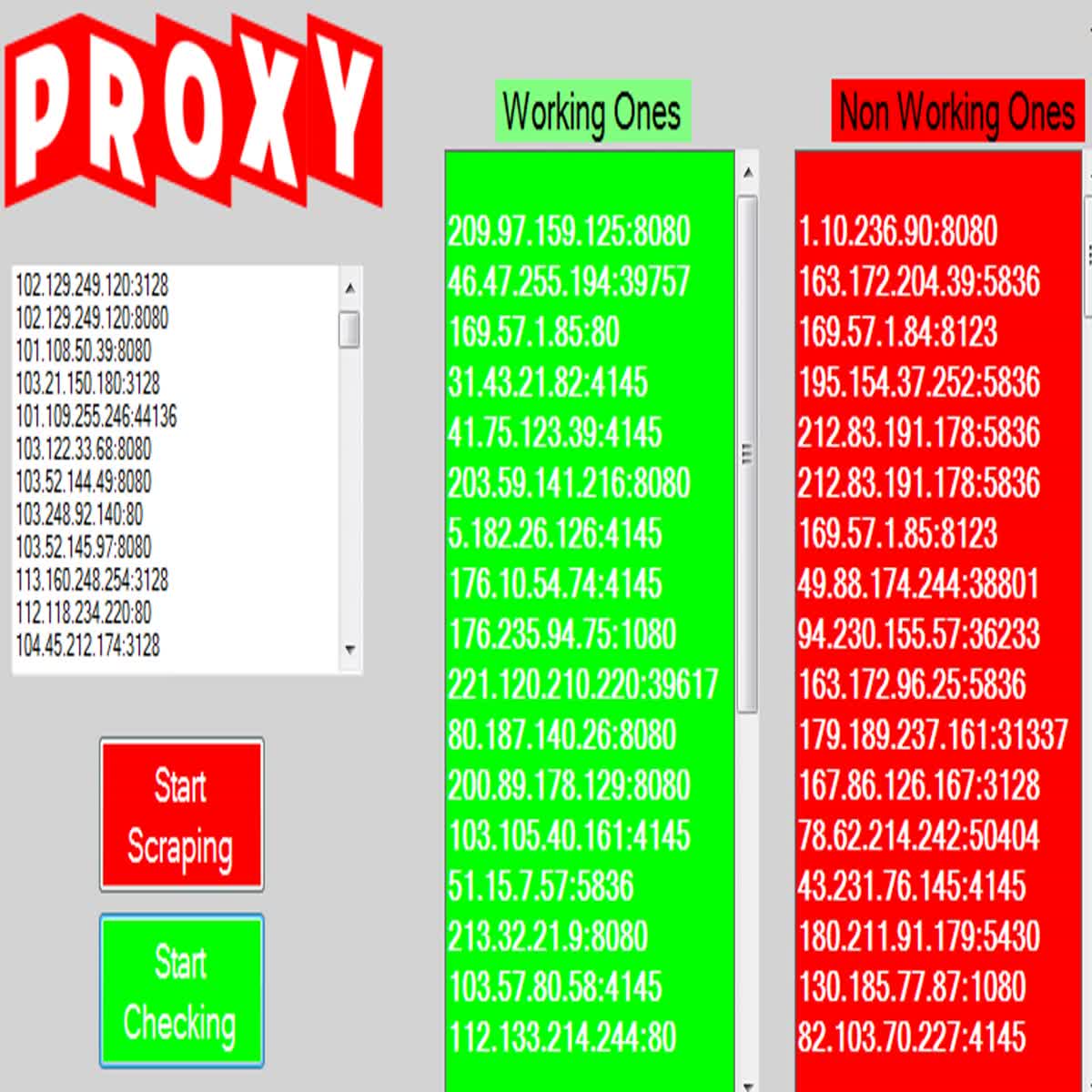

Creating a Proxy Scraper

Creating a proxy scraper involves several steps to collect proxies effectively from various sources. Start by locating trustworthy sites that offer free proxies and cover a range of types, such as HTTP, SOCKS4, and SOCKS5. It's essential to select websites that regularly update their lists so that the proxies are current. Common sources for gathering proxies include online communities, API services, and dedicated proxy directory websites.

Once you have a collection of potential sources, you can use a programming language like Python to automate the scraping process. Libraries such as Requests and lxml are well suited to downloading pages and parsing HTML. Develop a script that downloads the page content of each proxy list source and extracts the proxy information, such as IP address and port number. Make sure your scraper complies with each website's terms of service, and add delays between requests to avoid triggering anti-bot measures.
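As a rough illustration of the extraction step, the sketch below pulls `ip:port` pairs out of already-downloaded page text using only the standard library. It assumes the source prints address and port together as `ip:port`; sites that put them in separate table cells would need an HTML parser instead. The name `extract_proxies` and the sample markup are made up for the example.

```python
import re

# Matches "IP:port" pairs such as 203.0.113.7:8080 anywhere in the text.
PROXY_PATTERN = re.compile(r"\b(\d{1,3}(?:\.\d{1,3}){3}):(\d{2,5})\b")


def extract_proxies(page_text: str) -> list[str]:
    """Return unique, plausibly valid "ip:port" strings in order of appearance."""
    seen = set()
    proxies = []
    for ip, port in PROXY_PATTERN.findall(page_text):
        # The regex is loose, so reject octets above 255 and out-of-range ports here.
        octets_ok = all(0 <= int(octet) <= 255 for octet in ip.split("."))
        port_ok = 1 <= int(port) <= 65535
        candidate = f"{ip}:{port}"
        if octets_ok and port_ok and candidate not in seen:
            seen.add(candidate)
            proxies.append(candidate)
    return proxies


# Hypothetical page fragment; the last entry has an invalid octet and is dropped.
sample = "<td>203.0.113.7:8080</td><td>198.51.100.23:3128</td><td>999.1.1.1:80</td>"
print(extract_proxies(sample))  # ['203.0.113.7:8080', '198.51.100.23:3128']
```

Keeping extraction separate from downloading makes it easy to test the parser on saved pages without hitting the source site again.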

After gathering the proxy data, the next stage is to refine the list by validating the performance of the proxies. This is where a proxy checker comes into play. Add functionality to your scraper to check each proxy's connection status, latency, and anonymity level. By making requests through the proxies and measuring how they perform, you can filter out poor proxies and build a robust collection of reliable proxy servers for your web scraping projects.
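One way to sketch such a check with the standard library alone is shown below. It assumes an HTTP proxy given as `ip:port`, and the test URL `http://example.com/` is only a placeholder. The `fetch` parameter is an illustrative design choice so the timing logic can be exercised without a network.

```python
import time
import urllib.request


def fetch_via_proxy(proxy: str, url: str, timeout: float) -> int:
    """Fetch url through an HTTP proxy given as "ip:port"; return the status code."""
    handler = urllib.request.ProxyHandler(
        {"http": f"http://{proxy}", "https": f"http://{proxy}"}
    )
    opener = urllib.request.build_opener(handler)
    with opener.open(url, timeout=timeout) as response:
        return response.status


def check_proxy(proxy, url="http://example.com/", timeout=5.0, fetch=fetch_via_proxy):
    """Return (is_alive, latency_seconds); latency is None if the request failed."""
    start = time.perf_counter()
    try:
        status = fetch(proxy, url, timeout)
    except Exception:
        # Timeouts, refused connections, and malformed responses all count as dead.
        return False, None
    latency = time.perf_counter() - start
    return status == 200, latency
```

Calling `check_proxy("203.0.113.7:8080")` would attempt a real request through that (placeholder) address and report whether it answered with status 200 and how long it took.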

Verifying and Testing Proxies

After you have compiled a list of proxies, the next essential step is to check whether they work and how well. A reliable proxy checker will help you determine whether a proxy is functioning, fast, and fit for your intended use. Proxy verification tools can evaluate many proxies simultaneously, providing real-time feedback on their performance and dependability. By using a fast proxy checker, you can quickly filter out non-functional proxies, saving time and improving your scraping capability.
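Checking many proxies at once is mostly a matter of running the same check concurrently. The sketch below uses a thread pool, which suits this I/O-bound work. `check_many` is a hypothetical helper, and `checker` stands for any function you supply that takes a proxy and returns a truthy value when it works.

```python
from concurrent.futures import ThreadPoolExecutor


def check_many(proxies, checker, max_workers=20):
    """Run checker(proxy) on every proxy concurrently; return the ones that passed."""
    proxies = list(proxies)
    if not proxies:
        return []

    def safe_check(proxy):
        # A checker that raises is treated the same as one that returns False.
        try:
            return bool(checker(proxy))
        except Exception:
            return False

    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map preserves input order, so results line up with proxies.
        results = list(pool.map(safe_check, proxies))
    return [proxy for proxy, ok in zip(proxies, results) if ok]
```

The worker count of 20 is an arbitrary starting point; very large values can overwhelm your own connection and make healthy proxies look slow.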

Measuring proxy speed is vital for any web scraping task. It confirms that the proxies you choose can handle the load of your requests without slowing down your workflow. When testing proxy speed, take into account not just the latency but also the available throughput. The best free proxy checker tools let you evaluate both, helping you spot the proxies that perform best for your needs, whether you are scraping data or performing SEO research.

Another important aspect to consider is the anonymity level of the proxies in your list. Tools designed to check proxy anonymity can help you determine whether a proxy is transparent, anonymous, or elite. This distinction matters depending on the type of project; for instance, if you need to get around geographical restrictions or avoid detection by online platforms, high-anonymity proxies will be advantageous. Knowing how to assess whether a proxy is working under various conditions further helps in maintaining a reliable and productive scraping strategy.
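A common heuristic for anonymity levels looks at the headers the target server receives when you connect through the proxy. The sketch below assumes you already have those headers, for example from a header-echo endpoint you control. The header list and the function name `classify_anonymity` are illustrative choices, not a standard.

```python
# Headers that commonly reveal that a request passed through a proxy (assumed list).
PROXY_HEADERS = ("via", "x-forwarded-for", "forwarded", "x-real-ip", "proxy-connection")


def classify_anonymity(echoed_headers, real_ip):
    """Classify a proxy from the headers the target saw and your real IP address."""
    normalized = {name.lower(): str(value) for name, value in echoed_headers.items()}

    # Transparent: your real address shows up somewhere in the forwarded headers.
    # Note: this is a plain substring test, which is good enough for a sketch.
    if any(real_ip in value for value in normalized.values()):
        return "transparent"

    # Anonymous: the IP is hidden, but the headers admit a proxy is in use.
    if any(header in normalized for header in PROXY_HEADERS):
        return "anonymous"

    # Elite: no leaked IP and no proxy-identifying headers.
    return "elite"


print(classify_anonymity({"Via": "1.1 proxy", "User-Agent": "demo"}, "198.51.100.9"))
```

Here the example prints `anonymous`, because a `Via` header is present but the real address is not exposed.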

Leading Tools for Proxy Scraping

Regarding proxy scraping, choosing the right tools can substantially improve your efficiency and effectiveness. One of the top options is the ProxyStorm tool, known for its consistency and performance. This tool features a simple interface and supports the scraping of both HTTP and SOCKS proxies. With its advanced features, users can easily automate the workflow of collecting free proxies, guaranteeing they have a current list ready for web scraping.

Another good choice is a free proxy scraper, which lets users collect proxies without spending a dime. Tools like these often come with built-in verification capabilities to check the viability of the proxies collected. They can save time and offer a steady flow of usable IP addresses, making them a good option for those just starting out or with limited funds. Additionally, features such as filtering proxies by location or anonymity level can improve the user experience.

For serious web scrapers, integrating different tools can yield superior results. Fast proxy scrapers that emphasize quickness and performance paired with high-quality proxy checkers can help users harvest and verify proxies faster than ever before. By employing these resources, web scrapers can keep a strong pool of proxies to bolster their automation and data extraction efforts, guaranteeing that they have access to the highest quality proxy sources for their specific needs.

Best Sources for Free Proxies

When searching for free proxies, an effective method is to use online proxy lists and directories. Sites including Free Proxy List, Spys.one, and ProxyScrape maintain thorough and frequently updated databases of free proxies. These platforms categorize proxies according to parameters such as speed, anonymity level, and type (HTTP or SOCKS). By using these resources, users can quickly find proxies that suit their specific needs for web scraping or browsing at no cost.

Another great source for free proxies is community-driven platforms that allow users to share their own proxy discoveries. Platforms such as Reddit or specialized web scraping communities often feature threads dedicated to free proxy sharing. Engaging with these communities not only yields fresh proxy options but also allows users to receive immediate feedback on proxy quality and performance. This interactive approach can help filter out ineffective proxies and highlight top-notch options.

Finally, web scraping tools designed for collecting proxies can be a game-changer. Tools like ProxyStorm and dedicated Python scripts can automate the process of scraping free proxies from different sources. By running these scripts, users can compile fresh proxy lists tailored to their needs. Additionally, such tools frequently include features for checking proxy performance and anonymity, making them essential for anyone looking to quickly obtain and validate proxies for web scraping tasks.

Using Proxy Servers for Web Scraping and Automated Tasks

Proxies play a key role in web scraping and automation by spreading requests to a website across multiple IP addresses. This is essential for staying within rate limits and avoiding the IP bans that can occur when scraping data heavily. By cycling through a pool of proxies, scrapers can maintain an uninterrupted flow of requests without raising red flags. This allows for more efficient data collection from different sources, which is crucial for businesses that need up-to-date information from the web.
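Cycling through a pool can be sketched as a simple round-robin that also retires proxies after repeated failures. The class name `ProxyPool` and the default threshold of three failures are assumptions made for the example.

```python
class ProxyPool:
    """Round-robin proxy rotation that drops proxies after repeated failures."""

    def __init__(self, proxies, max_failures=3):
        # dict.fromkeys removes duplicates while keeping the original order.
        self._proxies = list(dict.fromkeys(proxies))
        self._failures = {proxy: 0 for proxy in self._proxies}
        self._index = 0
        self._max_failures = max_failures

    def next(self):
        """Return the next proxy in rotation."""
        if not self._proxies:
            raise RuntimeError("proxy pool is empty")
        proxy = self._proxies[self._index % len(self._proxies)]
        self._index += 1
        return proxy

    def report_success(self, proxy):
        """Reset the failure count after a successful request."""
        if proxy in self._failures:
            self._failures[proxy] = 0

    def report_failure(self, proxy):
        """Count a failure and evict the proxy once it reaches the threshold."""
        if proxy not in self._failures:
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self._max_failures:
            self._proxies.remove(proxy)
            del self._failures[proxy]

    def __len__(self):
        return len(self._proxies)
```

In a scraper, each request would call `next()`, then `report_success` or `report_failure` depending on the outcome, so that dead proxies fall out of rotation on their own.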

In addition to avoiding restrictions, proxies can help maintain privacy and security during data extraction. Using residential or dedicated proxies masks the original IP address, making it difficult for websites to trace the origin of the requests. This anonymity is particularly important when scraping sensitive information or competing with other scrapers. Moreover, proxy servers can enable access to location-based content, widening the scope of data that can be scraped from various regions and markets.

When performing automated tasks using proxies, it is crucial to choose the type of proxy that fits the use case. HTTP proxies are suitable for standard web scraping tasks, while SOCKS proxies offer greater versatility and support for multiple protocols. Many web scraping tools come with built-in proxy support, making it more convenient to configure and manage proxy rotation. By choosing the right proxies, users can boost their data extraction efficiency, increase success rates, and refine their automated workflows.

Tips for Finding High-Quality Proxies

When you are looking for premium proxies, it's important to focus on established sources. Look for recommended proxy services that specialize in dedicated and private proxies, as they usually offer more reliability and privacy. Internet communities and groups centered on web scraping can also provide useful insights and suggestions for credible proxy options. Be wary of free proxy lists, as they often contain subpar proxies that can undermine your web scraping tasks.

Testing is crucial in your search for premium proxies. Use a reliable proxy checker to test the performance, anonymity, and location of different proxies. This will help you remove proxies that do not meet your standards. Additionally, consider using proxies that support common protocols such as HTTP or SOCKS5, as these are widely supported by web scraping applications and libraries.

Finally, keep an eye on each proxy's uptime and response time. A proxy with high uptime ensures consistent access, while low latency provides faster responses, which is essential for web scraping. Revisit your proxy list regularly to make sure you are using the best proxies available. By combining these strategies, you can markedly improve your odds of finding the high-quality proxies that successful web scraping depends on.
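Tracking availability over time can be as simple as keeping a rolling window of recent check results per proxy. The following sketch uses a hypothetical `UptimeTracker` class; the window size of 20 and the 0.8 threshold are arbitrary example values.

```python
from collections import deque


class UptimeTracker:
    """Keep a rolling window of check results and report uptime per proxy."""

    def __init__(self, window=20):
        self._window = window
        self._history = {}

    def record(self, proxy, success):
        """Store one check result; old results fall off once the window is full."""
        history = self._history.setdefault(proxy, deque(maxlen=self._window))
        history.append(bool(success))

    def uptime(self, proxy):
        """Fraction of recent checks that succeeded (0.0 if never checked)."""
        history = self._history.get(proxy)
        if not history:
            return 0.0
        return sum(history) / len(history)

    def healthy(self, min_uptime=0.8):
        """Return proxies whose recent uptime meets the threshold, sorted by name."""
        return sorted(p for p in self._history if self.uptime(p) >= min_uptime)
```

Feeding each scheduled check into `record` and periodically keeping only the proxies returned by `healthy()` is one way to revisit the list automatically.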

Harnessing the Power of Proxies for Efficient Data Collection

April 6, 2025, 12:15

In today's information-driven world, the demand for efficient data collection methods has become more pressing than ever. With the vast amount of information available online, harnessing the power of proxies can significantly enhance your ability to gather data swiftly and discreetly. Proxies serve as go-betweens that allow users to send requests to websites without revealing their identity, making them essential tools for web scraping, automation, and data extraction.

Whether you are a researcher, a marketer, or a developer, understanding the various types of proxies and how they work can give you a strategic advantage. From free proxy scrapers to advanced proxy verification tools, knowing how to use these resources effectively helps you access high-quality data without running into barriers such as rate limiting or IP bans. In this article, we will discuss the best practices for sourcing and managing proxies, the differences between HTTP and SOCKS proxies, and the top tools available to improve your data collection efforts. Join us as we dive into the world of proxies and share strategies to streamline your approach to efficient data gathering.

Understanding Proxy Servers

Proxies serve as intermediaries between a user's device and the web, relaying requests and responses while concealing the client's true IP address. This enables users to preserve their anonymity and protect their online privacy. By routing traffic through a proxy server, users can access information that may be restricted in their geographical location, giving them a broader range of available resources.

There are multiple types of proxy servers, each with its own capabilities. HTTP proxies are designed for web traffic, making them appropriate for browsing and data extraction, while SOCKS proxies operate at a lower level and can carry arbitrary TCP traffic, with SOCKS5 also supporting UDP. Understanding the differences between HTTP, SOCKS4, and SOCKS5 proxies helps users select the right type for their needs, whether for data collection, automated tasks, or browsing.

The use of proxies has become increasingly relevant with the rise of data extraction and gathering activities. As more companies seek to gather information from diverse sources, proxy servers offer a solution to the issues posed by access restrictions, IP bans, and rate limits. By using proxy servers, users can scrape data efficiently, test their automated scripts, and keep their tasks running without interruption.

Categories of Proxies for Data Collection

When it comes to data collection, understanding the different types of proxies is crucial. HTTP proxies are the most commonly used for web scraping. They work by relaying requests from a client through the proxy server, which contacts the website on the user's behalf. HTTP proxies are well suited to gathering data from websites that do not require login credentials. Their ease of use and wide availability make them a popular option among those seeking to extract data quickly and effectively.

SOCKS proxies, on the other hand, offer a more versatile option for data collection. In contrast to HTTP proxies, which are limited to web traffic, SOCKS proxies can handle many kinds of traffic, making them appropriate for a wider range of uses, including email, file sharing, and other data transfers. In particular, SOCKS5 proxies provide additional capabilities such as authentication, making them a popular option for advanced users who need reliable connections for scraping data.

Along with these typical categories, proxy servers can also be categorized as public or private. Public proxy servers are free and easily accessible, but they often suffer from low performance and insecure connections. Private proxy servers, in contrast, are paid services that offer exclusive IPs, ensuring higher speed and superior reliability. Knowing these differences enables users to select the appropriate kind of proxy based on their individual requirements, ensuring optimal outcomes in their efforts.

Tools for Scraping and Verifying Proxies

When it comes to collecting proxies for web scraping, having the appropriate tools can significantly improve your productivity. A proxy scraper is an indispensable tool that automates the process of collecting available proxies from multiple sources. Many users look for a free proxy scraper to reduce costs while still acquiring a comprehensive proxy list. With countless options available, the best proxy scrapers offer speed and reliability, so you can get a fresh list of proxies quickly.

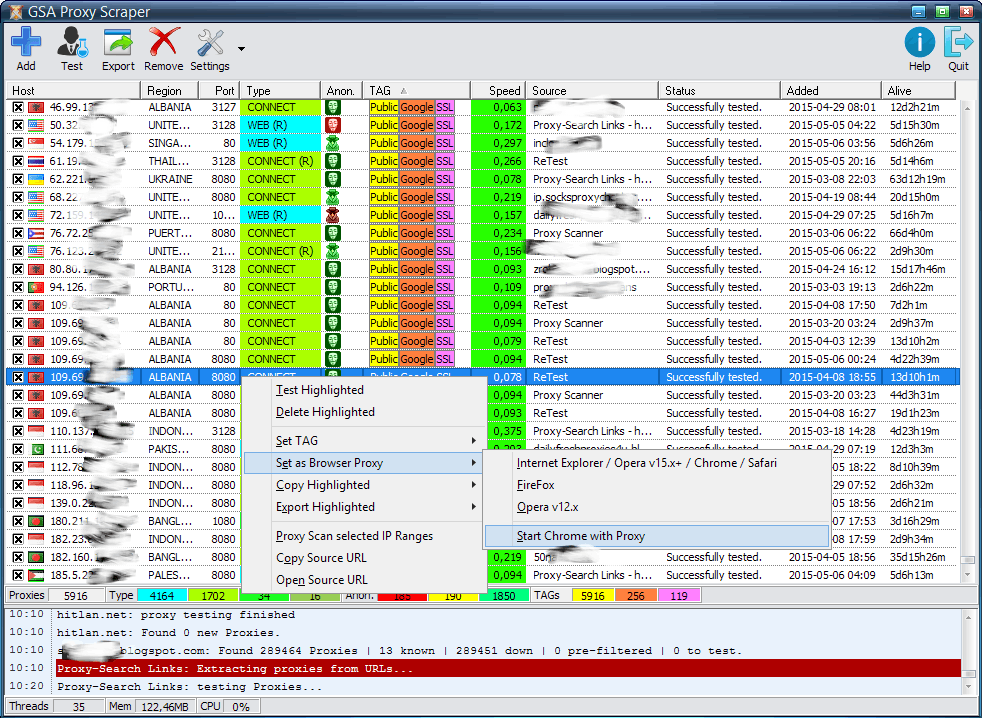

Once you have your proxy list, checking that those proxies actually work becomes important. A proxy checker is indispensable for verifying that each proxy is functional and meets your needs for speed and anonymity. The top proxy checker tools can check multiple proxies in parallel, providing users with live updates on their status. Tools like ProxyStorm stand out for their comprehensive features, which allow users to check HTTP and SOCKS proxies for functionality and performance.

Understanding the differences between proxy types and how to use them for automation is vital. Working with a proxy verification tool helps users learn about the types of proxies available, such as HTTP, SOCKS4, and SOCKS5, and their specific uses. Knowing how to determine whether a proxy is working and how to verify proxy speed can improve your web scraping efforts, whether you are using private or shared proxies. The combination of efficient scraping and robust checking tools will ultimately lead to effective data extraction.

Enhancing Proxy Management for Data Extraction

When performing web data extraction, using proxies well can drastically improve the efficiency of your data gathering activities. One key method is to build a dependable proxy list made up of high-quality proxies suited to your objectives. An online proxy generator can help in assembling a collection of proxies that match your criteria, whether you require HTTP, SOCKS4, or SOCKS5 proxies. Having both private and shared proxies gives you flexibility across different scraping tasks while keeping a balance between efficiency and anonymity.

Another important aspect of optimizing proxy use is frequent testing and evaluation of proxy speed. The most effective proxy monitoring tools make this easier by letting you track the performance of your proxies in real time. By using a proxy verification tool, you can swiftly see which proxies are operational and which ones need to be replaced. This keeps your scraping activities efficient and productive, reducing downtime caused by faulty proxies.

Moreover, understanding the distinction between free and paid proxies is crucial for improving your data scraping efforts. While free proxy solutions can be a good starting point, they often come with limitations in speed, reliability, and anonymity. In contrast, investing in high-quality paid proxies can offer a substantial edge, especially in high-stakes environments. This investment can result in faster data acquisition and a reduced likelihood of being banned by your target data sources. By using the most reliable proxy providers for data extraction, you can improve your data gathering and arrive at more meaningful findings.

Verifying Proxy Anonymity and Speed

When using proxies for data harvesting, confirming their anonymity and performance is vital for efficient and secure data scraping. Anonymity levels vary considerably among proxy servers, and recognizing the differences between transparent, anonymous, and elite proxies helps in choosing the right option for your data scraping requirements. A good proxy checker lets users test whether their proxy servers are revealing their real IP addresses, and so assess the degree of anonymity offered.

The speed of proxies is another essential factor that directly influences the effectiveness of your data collection efforts. A fast proxy checker can identify high-speed proxies that minimize response time when making requests. It is helpful to use a proxy verification tool that measures response times and lets users compare different proxies quickly. This ensures that you can gather data without major delays, leading to more effective web scraping.

To check proxy anonymity and speed properly, it's recommended to use both HTTP and SOCKS proxy checkers. These utilities not only check whether a proxy is operational but also measure connection speed and the level of anonymity. By focusing on proxies that deliver fast connections while preserving solid anonymity, users can greatly improve their web scraping activities and protect their online identity during data collection.

Identifying Reliable Proxies for Data Extraction

When it comes to data scraping, the quality of proxies plays a vital role in ensuring a smooth process. To find reliable proxies, it's important to decide what kind of proxies you need. Private proxy servers often provide better performance and stability than public proxies. While private proxies can be expensive, they offer improved anonymity and speed, making them suitable for tasks that handle confidential information or require high availability.

Another strategy for locating quality proxies is to turn to well-known proxy sources. There are multiple sites and services dedicated to offering premium proxy lists. A proxy checker lets you filter through these lists to identify proxies that satisfy your particular criteria, such as performance and reliability. Frequently updating your proxy list and verifying that the proxies still work is critical to sustaining peak performance.

In conclusion, understanding how to scrape proxies for free from trusted sources can greatly enhance your ability to extract data. Employing tools like ProxyStorm and using SEO tools with proxy support can increase your productivity. Additionally, knowing the distinction between HTTP, SOCKS4, and SOCKS5 proxies will help you pick the right proxies for your automated operations, so that you get the most out of your data collection activities.

Best Practices for Proxy Management

Managing proxy servers well is essential for improving your data gathering processes. Begin by organizing your proxy lists and classifying them by type, such as HTTP or SOCKS, and by quality, whether they are private or public. Use a dependable online proxy generator to keep your sources fresh and relevant. Update your lists consistently to remove inactive proxies, as stale proxies can slow down your scraping and reduce its efficiency.
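Organizing a list by type can be sketched with the standard library's URL parser. The example below assumes each proxy is stored as a full URL such as `socks5://198.51.100.23:1080`; entries without a scheme, host, or port are skipped. The helper name `group_by_scheme` is hypothetical.

```python
from collections import defaultdict
from urllib.parse import urlsplit


def group_by_scheme(proxy_urls):
    """Deduplicate proxy URLs and group them by scheme (http, socks4, socks5, ...)."""
    groups = defaultdict(list)
    seen = set()
    for raw in proxy_urls:
        url = raw.strip()
        if not url or url in seen:
            continue
        seen.add(url)
        parts = urlsplit(url)
        try:
            port = parts.port  # raises ValueError for a non-numeric or out-of-range port
        except ValueError:
            continue
        # Keep only entries that look like scheme://host:port.
        if parts.scheme and parts.hostname and port:
            groups[parts.scheme.lower()].append(url)
    return dict(groups)


proxy_list = [
    "http://203.0.113.7:8080",
    "socks5://198.51.100.23:1080",
    "http://203.0.113.7:8080",   # duplicate, dropped
    "http://203.0.113.9:notaport",  # malformed, dropped
]
print(group_by_scheme(proxy_list))
```

The same grouping idea extends to quality labels, for example by storing a private or public flag alongside each URL.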

Testing proxy servers is another essential practice. Use a robust proxy checker to verify that every proxy in your list is not only working but also meets your speed and anonymity requirements. A good free proxy checker can streamline this process, enabling you to assess the performance of your proxies rapidly. By analyzing response times and anonymity levels, you can choose the most suitable candidates for your data extraction tasks.

Lastly, reflect on the ethical implications of using proxy servers for task automation and data gathering. Always respect the terms of service of the sites you are scraping. Using proxies ethically, particularly when using public proxy servers, will help preserve your credibility and secure long-term access to valuable sources of information. Finding a balance between effectiveness and ethical practice will strengthen your overall strategy for managing proxies.

Proxy Verification Tools: Finding the Right Solution

April 6, 2025, 12:08

In the modern digital landscape, accessing data efficiently and anonymously has become increasingly important. With web scraping becoming a critical tool for businesses and developers, having the right proxy solutions is paramount. Proxy verification tools help distinguish reliable proxies from those that may hinder your scraping efforts. From confirming that a proxy is operational to validating its speed and anonymity, understanding how to use these tools can significantly improve your web scraping experience.

Selecting the right proxy scraper and checker can make all the difference when it comes to extracting data. With a plethora of options available, from free proxy scrapers to specialized tools like ProxyStorm, navigating this space can be overwhelming. This article will explore the various types of proxy verification solutions, discuss the differences between HTTP, SOCKS4, and SOCKS5 proxies, and detail the best methods for identifying high-quality proxies for your needs. Whether you are new to web scraping or looking to optimize your existing setup, this guide will deliver the insights necessary for choosing the best tools for proxy verification and data extraction.

Types of Proxy Validation Utilities

Proxy verification tools come in different forms, each designed to meet particular needs when it comes to managing proxies. A common category is the proxy scraper, which automatically scours the internet for available proxy servers. Such tools can efficiently gather a significant list of proxies from multiple sources, providing users with an abundant database to select from. A well-implemented free proxy scraper can be particularly valuable for those on a budget, as it allows users to access proxies without any cost.

Another essential category is the proxy validation tool, which verifies the status and quality of the proxies gathered. This tool evaluates whether a proxy is working, its anonymity level, and its speed. Users can benefit from the best proxy checker tools available in the market, as they help filter out unusable proxies, ensuring that only trustworthy options remain for tasks such as web scraping or automation. Premium proxy checkers can make a significant difference in the efficiency of any web-related project.

Lastly, there are specialized tools for various types of proxies, such as HTTP and SOCKS proxy checkers. Such tools focus on the specific protocols used by proxies, determining compatibility and performance based on the connection type. Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies is crucial, and using the right verification tool tailored to the type of proxy can help users optimize their web scraping efforts. By utilizing these various types of proxy validation tools, users can enhance their productivity and ensure they have access to efficient proxies.

How to Use Proxy Scrapers

Using a proxy scraper is a straightforward process that can considerably improve your web scraping efforts. To begin, pick a trustworthy proxy scraper that fits your needs, for example a free proxy scraper or a fast proxy scraper. These applications are built to crawl the web and gather available proxy servers from multiple sources. Once you have chosen your tool, configure it for the kind of proxy you need, be it HTTP or SOCKS, and set any extra options, such as geographical restrictions or speed requirements.

After setting up your proxy scraper, start the scraping process. The tool will look for proxies and generate a list that you can then use for your tasks. It is wise to monitor the collection run to make sure the tool is functioning properly and producing high-quality results. Depending on the scraper you select, you may have options to filter proxies by speed, location, and anonymity level, which will help refine your list further.
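If your scraper does not offer such filters, they are easy to apply afterwards. The sketch below assumes each proxy has already been annotated with a country code, an anonymity level, and a measured latency; the `ProxyRecord` fields and the `filter_proxies` helper are invented for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProxyRecord:
    address: str       # "ip:port"
    country: str       # two-letter country code
    anonymity: str     # "transparent", "anonymous", or "elite"
    latency_ms: float  # measured response time


def filter_proxies(records, max_latency_ms=None, countries=None, anonymity_levels=None):
    """Keep records matching every given criterion, fastest first."""
    selected = []
    for record in records:
        if max_latency_ms is not None and record.latency_ms > max_latency_ms:
            continue
        if countries is not None and record.country not in countries:
            continue
        if anonymity_levels is not None and record.anonymity not in anonymity_levels:
            continue
        selected.append(record)
    return sorted(selected, key=lambda record: record.latency_ms)


# Placeholder data using documentation-only IP ranges.
records = [
    ProxyRecord("203.0.113.7:8080", "US", "elite", 420.0),
    ProxyRecord("198.51.100.23:3128", "DE", "transparent", 150.0),
    ProxyRecord("192.0.2.44:1080", "US", "anonymous", 310.0),
]
fast_us = filter_proxies(records, max_latency_ms=500, countries={"US"})
print([record.address for record in fast_us])  # ['192.0.2.44:1080', '203.0.113.7:8080']
```

Leaving a criterion as `None` disables it, so the same function covers filtering by any combination of speed, location, and anonymity.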

After you have your proxy list, the next step is to check and verify the proxies to make sure they work as expected. For this, a proxy verification tool is important. These applications test your proxies for speed, availability, and anonymity to make sure they meet your requirements. It's vital to update and recheck your proxy list regularly for optimal performance, especially if you are using it for specific purposes like SEO or automation, to prevent the problems that come from using poor-quality proxy servers.

Guidelines for Choosing Proxies

When selecting proxies for your requirements, it is essential to consider the type of proxy that matches your goals. HTTP proxies are commonly used for data extraction and browsing, while SOCKS proxies provide more versatility and support multiple types of traffic. The choice between SOCKS4 and SOCKS5 proxies is also significant, as SOCKS5 offers additional features like improved authentication and UDP support. Understanding the specific use case for the proxy will help narrow down the options.

Another key factor is the level of anonymity provided by the proxies, which ranges from transparent to elite. Transparent proxies expose the user's IP address, while elite proxies hide both the user's IP and the fact that a proxy is being used. For web scraping or automation tasks, choosing anonymous or elite proxies helps keep the operations discreet, reducing the risk of being blocked or throttled by target websites.

Lastly, evaluating the performance and reliability of the proxies is important. A fast proxy is necessary for tasks that require timely data scraping and smooth browsing. Checking proxy speed and response times, as well as uptime history, can offer insight into their performance. Make sure to use proxy verification tools to test and confirm that the proxies you select are not only quick but also reliably available for the intended tasks.

Proxy Server Speed and Privacy Testing

When employing proxies for web scraping or automation, assessing their speed is essential. A quick proxy guarantees that your scraping operations are efficient and can manage requests without considerable delays. To measure proxy speed, use trusted proxy checking tools that provide metrics such as response time and throughput. Look for solutions that allow you to test several proxies at once to streamline your workflow. By finding the most efficient proxies, you can improve your data extraction process and ensure a steady flow of information.
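Response time and throughput can be derived from a handful of timed downloads. The sketch below assumes you have already collected `(elapsed_seconds, bytes_received)` pairs for a proxy; the function name `summarize_samples` and the dictionary keys are illustrative.

```python
import statistics


def summarize_samples(samples):
    """Summarize (elapsed_seconds, bytes_received) pairs for one proxy."""
    if not samples:
        raise ValueError("at least one sample is required")
    latencies = [elapsed for elapsed, _ in samples]
    total_bytes = sum(size for _, size in samples)
    total_time = sum(latencies)
    return {
        # The median is less sensitive to one slow request than the mean.
        "median_latency_s": statistics.median(latencies),
        # Overall throughput: all bytes received divided by all time spent.
        "throughput_bytes_per_s": total_bytes / total_time if total_time > 0 else 0.0,
    }


# Three hypothetical requests through the same proxy.
print(summarize_samples([(0.5, 1000), (1.5, 3000), (1.0, 2000)]))
# {'median_latency_s': 1.0, 'throughput_bytes_per_s': 2000.0}
```

Comparing these two numbers across proxies gives a simple ranking: low median latency for many small requests, high throughput for large downloads.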

Anonymity is another critical aspect to take into account when choosing proxies. Premium proxies should mask your true IP address, so that your activities remain private and protected. Tools like SOCKS proxy checkers can help evaluate the level of anonymity a proxy offers, ranging from transparent to elite. Testing for anonymity means checking whether the proxy exposes your real IP or hides it completely, which is crucial for privacy in web scraping tasks.

To successfully integrate speed and anonymity testing, look for proxy verification tools that offer all-in-one functionalities. These tools not only test the speed of each proxy but also assess its anonymity level. By utilizing a complete solution, you can quickly filter through proxy lists and choose the most suitable options for your specific needs. This method improves your web scraping efforts while safeguarding your identity online, making it easier to navigate the complexities of the internet without issues.

Best Proxy Providers for Web Scraping

When it comes to web scraping, choosing the best proxy options is important for guaranteeing optimal performance and reliability. Free proxies can be tempting for those on a budget, but they often come with challenges such as reduced speeds, unstable connections, and potential IP bans. Some of the top places to find free proxies include forums, GitHub, and dedicated proxy websites. While they can be a decent starting point, always verify their dependability through a strong proxy checker.

For more consistent and faster performance, look into paid proxy services. These services typically offer rotating IP addresses, advanced security features, and dedicated customer support. Several established providers specialize in web scraping proxies. Their paid proxies are built for high data volume and anonymity, which is crucial for scraping without attracting unwanted attention.

Besides dedicated proxy providers, you can also look into SEO tools that support proxy usage. These tools frequently come with proxy list generators and built-in proxy checkers, which makes it simpler to manage your scraping tasks. By combining premium and freely available resources, you can gather a diverse list of high-quality proxies and keep your web scraping efficient and reliable.

Free versus Paid Proxy Services

When it comes to utilizing proxy servers, a major consideration you will face is whether to opt for complimentary or paid proxy services. Free proxies can be incredibly attractive due to their zero cost and ease of access. Many users rely on free proxy scrapers to gather a list of available proxies without any financial obligation. However, free proxies typically come with significant limitations, such as decreased performance and interruptions in service. Moreover, the dependability and anonymity of free proxies can be questionable, making them less suitable for sensitive tasks like data extraction.

On the other hand, premium proxies offer distinct advantages that can justify their price. They typically provide faster connection speeds, higher uptime, and better overall reliability. Most paid proxy solutions implement strong security measures to keep your online activities confidential. Furthermore, with options like residential and dedicated proxies, users can benefit from a greater level of anonymity. This makes paid services particularly attractive for businesses and users engaged in tasks that require a stable and trustworthy proxy connection.

Ultimately, the decision between complimentary and premium proxies will be determined by your specific requirements and application scenarios. If you are conducting casual surfing or simple activities, free proxies may be sufficient. However, for users engaged in web scraping or needing consistent results, choosing a quality premium proxy solution is typically the wiser choice. It is essential to take into account the importance of proxy quality and reliability, as these elements can greatly affect your outcomes in various online pursuits.

Tools for Streamlining Proxy Management

In web scraping and online automation, proxy usage is crucial for maintaining anonymity and bypassing restrictions. Several tools are developed specifically to streamline the process of handling proxies. With a trustworthy proxy scraper, users can compile a list of sources for both free and premium proxies. This gives you flexibility and ensures that you have access to high-quality proxies for your particular needs.

One notable tool for streamlining proxy usage is ProxyStorm, which offers a comprehensive proxy list along with features such as a fast proxy validator. This helps confirm that the proxies you intend to use are both working and trustworthy. Users looking for a free solution can explore open-source options that scrape proxies and check them for anonymity and speed, providing a broad toolbox for web scraping or SEO tools with proxy support.

For those comfortable with programming, proxy scraping scripts written in Python can take automation further. By integrating these scripts into your workflow, you can automate the whole process of collecting proxies, checking their performance, and managing them for web scraping or other automated tasks. This combination of tools and scripts lets you focus on your core work while keeping your proxy usage efficient and reliable.

How to Locate Dependable Proxies for Online Data Extraction

April 6, 2025, 11:13

In the world of web scraping, reliable proxies are a vital component of successful data extraction. Proxies can help safeguard your identity, prevent throttling by servers, and allow you to access restricted data. However, finding the right proxy solutions can feel overwhelming, as there are countless options available online. This guide is designed to help you navigate the complex landscape of proxies and find the most effective ones for your web scraping needs.

As you search for proxies, you'll come across terms like proxy scrapers, proxy checkers, and proxy lists. These tools are vital for sourcing high-quality proxies and making sure they perform well for your specific tasks. Whether you are looking for free options or considering paid services, understanding how to assess proxy speed, anonymity, and reliability will significantly improve your web scraping projects. Below we cover the best practices for finding and using proxies effectively, so that your data scraping efforts are both efficient and successful.

Understanding Proxies for Data Extraction

Proxies play a crucial role in web scraping by acting as intermediaries between the scraper and the websites being scraped. When you use a proxy, your requests to a website come from the proxy's IP address instead of your own. This hides your true IP and makes it less likely that the website blocks you because of abnormal traffic patterns. By using proxies, scrapers can retrieve data without revealing their real IP addresses, allowing for more extensive and effective data extraction.

Different types of proxies serve different roles in web scraping. HTTP proxies are suitable for standard web traffic, while SOCKS proxies work at a lower level and can carry a wider variety of traffic: any TCP-based protocol and, with SOCKS5, UDP as well. The choice between HTTP and SOCKS proxies depends on the specific needs of your data extraction project, such as the protocols involved and the level of anonymity required. Additionally, proxies can be grouped as either public or private, with private proxies generally offering better speed and reliability for scraping jobs.

To ensure successful data extraction, it is essential to find high-quality proxies. Reliable proxies not only maintain fast response times but also provide a level of anonymity that protects your data collection tasks. Using a proxy verification tool can help you determine the performance and anonymity of the proxies you intend to use. Ultimately, understanding how to use proxies properly can greatly improve both the efficiency and the success rate of your web scraping.

Types of Proxies: HTTP, SOCKS4, and SOCKS5

When it comes to web scraping, understanding the different types of proxies is essential for effective data collection. HTTP proxies are intended for web traffic and are often used for accessing websites or gathering web content. They perform well for standard HTTP requests and are a natural fit when the scraping target is a website. However, they may struggle with non-HTTP traffic.

SOCKS4 proxies provide a more versatile option, as they can relay any TCP-based traffic rather than only HTTP. This flexibility allows users to circumvent restrictions and access a wider range of online resources. However, SOCKS4 does not support UDP, authentication, or IPv6 addresses, which may limit its applicability in certain situations.

SOCKS5 is the most advanced of the three, offering more features and flexibility. It supports both TCP and UDP, allows for authentication, and can handle IPv6 addresses. This makes SOCKS5 proxies a strong choice for web scraping, particularly when privacy is a concern. Their capacity to tunnel different types of traffic adds to their effectiveness in fetching data from multiple sources.
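The differences between the two SOCKS versions can be captured in a small lookup table. The sketch below is only an illustration of that idea: the class and function names are made up for this example, and the capability flags follow the protocol definitions, in which SOCKS4 is TCP-only with no authentication or IPv6 support.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SocksCaps:
    """Capabilities of a SOCKS protocol version (names are illustrative)."""
    udp: bool
    auth: bool
    ipv6: bool


# SOCKS4 relays TCP only; SOCKS5 adds UDP, authentication, and IPv6.
SOCKS_CAPS = {
    "socks4": SocksCaps(udp=False, auth=False, ipv6=False),
    "socks5": SocksCaps(udp=True, auth=True, ipv6=True),
}


def socks_versions_for(udp=False, auth=False, ipv6=False):
    """Return the SOCKS versions that satisfy the given requirements."""
    return [
        name
        for name, caps in SOCKS_CAPS.items()
        if (caps.udp or not udp)
        and (caps.auth or not auth)
        and (caps.ipv6 or not ipv6)
    ]
```

For example, `socks_versions_for(udp=True)` leaves only `"socks5"`, while a plain TCP job with no special requirements can use either version.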

Identifying Trustworthy Proxy Providers

When looking for trustworthy proxy sources for web scraping, it's best to start with well-known suppliers that have an established reputation. Many users opt for paid services that offer private proxies, which tend to be more stable and faster than free alternatives. Such providers often have a good track record for uptime and support, so users get dependable results. User reviews and community recommendations can help you locate trustworthy sources.

Another approach is to use proxy lists and tools that gather proxies from various websites. Although there are many such lists, their quality can vary significantly. To make sure the proxies are dependable, use a proxy checker to test their speed and anonymity. Focus on sources that refresh their lists frequently, as this helps you obtain up-to-date, working proxies, which is crucial for effective web scraping.

Finally, it's important to distinguish between HTTP and SOCKS proxies. HTTP proxies are usually used for web browsing, whereas SOCKS proxies are more flexible and can handle multiple types of connections. If your data scraping project requires that extra functionality, a SOCKS proxy may be worth the investment. Understanding the distinction between public and private proxies can also inform your decision. Public proxies are generally free but tend to be slower and less secure, whereas private proxies cost money but offer better performance and security for data scraping.

Proxy Scrapers: Tools and Strategies

In web scraping, having the right tools is important for collecting data quickly. Proxy scrapers are specifically designed to simplify the task of gathering proxy lists from diverse sources. These tools save time and give you a variety of proxies for your scraping tasks. A free proxy scraper can be a good way to begin, but it's important to assess the quality and reliability of the proxies it collects.

To keep your scraping effective, pair a fast proxy scraper with a checker that can quickly verify how the proxies perform. Tools like ProxyStorm offer advanced features that help users filter proxies by criteria such as speed, level of anonymity, and type (HTTP or SOCKS). With this kind of proxy checker, much of the verification can be automated, making data extraction more streamlined.

Understanding the difference between HTTP, SOCKS4, and SOCKS5 proxies is also important. Each type serves different purposes, with SOCKS5 offering the most versatility and supporting the broadest variety of protocols. When picking tools for proxy scraping, consider a proxy verification tool that measures proxy speed and anonymity. This ensures that the proxies you use are not only functional but also appropriate for your specific needs, whether you are working on SEO tasks or automated data extraction projects.

Assessing and Confirming Proxy Anonymity

When using proxies for web scraping, it is crucial to verify that they are genuinely anonymous. Testing proxy anonymity means checking whether your real IP address is revealed while you are connected through the proxy. This can be done with online services that report your current IP address, comparing the result before and after connecting through the proxy. A dependable anonymous proxy should hide your actual IP, revealing only the proxy's IP address to any external sites you visit.

To check the level of anonymity, you can sort proxies into three categories: transparent, anonymous, and elite (high anonymity). Transparent proxies do not hide your IP and are easily detected, while anonymous proxies conceal your IP but may still reveal that a proxy is in use. Elite proxies, on the other hand, provide complete masking, so requests look as if they come from a regular user. A proxy checker tool lets you determine which type of proxy you are dealing with and pick the best option for protected scraping.
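One common heuristic for telling the three categories apart is to look at the request headers the target server receives through the proxy. The sketch below assumes you already have those headers as a dictionary, for example from a header-echo endpoint you control. The header list and function name are assumptions for illustration, and real checkers may use additional signals.

```python
import re

# Headers that proxies commonly add (an illustrative, non-exhaustive list).
PROXY_HEADERS = {
    "via",
    "x-forwarded-for",
    "forwarded",
    "x-real-ip",
    "proxy-connection",
}


def classify_anonymity(real_ip, seen_headers):
    """Classify a proxy from the headers the target server saw.

    real_ip: your own public IP address without the proxy.
    seen_headers: mapping of header name to value as received by the server.
    """
    normalized = {k.lower(): str(v) for k, v in seen_headers.items()}

    # Transparent: the real IP appears as a whole token in some header value.
    for value in normalized.values():
        if real_ip in re.split(r'[\s,;="]+', value):
            return "transparent"

    # Anonymous: the IP is hidden, but proxy-specific headers are present.
    if PROXY_HEADERS.intersection(normalized):
        return "anonymous"

    # Elite: nothing in the headers hints at a proxy.
    return "elite"
```

Header values are split into tokens instead of using a plain substring test, so that an address such as `1.2.3.4` is not mistaken for part of `11.2.3.45`.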

Regularly testing the anonymity of your proxies not only safeguards your identity but also improves the efficiency of your web scraping. Dedicated proxy services or specialized proxy testing tools can simplify this process, saving you time and confirming that you are consistently using high-quality proxies. Use them to maintain a robust and anonymous web scraping operation.

Free vs. Paid Proxies: Which to Select?

When it comes to choosing proxies for web scraping, one of the key decisions you'll face is whether to use free or paid proxies. Free proxies are easily accessible and cost nothing, making them an appealing option for casual scrapers or those on a tight budget. However, their reliability and speed are inconsistent. They often come with limitations such as slow connections, limited anonymity, and a higher likelihood of being blocked by target websites, which can reduce the effectiveness of your extraction efforts.

On the other hand, premium proxies generally provide a more reliable and secure solution. They often come with features like exclusive IP addresses, improved speed, and better anonymity. Paid proxy services frequently offer strong customer support, helping you troubleshoot any problems that arise during your scraping tasks. Moreover, these proxies are less likely to be blacklisted, which makes for a smoother and more effective scraping process, especially on bigger and more complex projects.

Ultimately, the decision between free and paid proxies will depend on your specific needs. If you are conducting small-scale scraping and can tolerate some interruptions or reduced speeds, complimentary proxies may be sufficient. However, if your scraping operations require speed, reliability, and anonymity, opting for a paid proxy service could be the best option for achieving your goals effectively.

Best Practices for Using Proxies in Automated Tasks

When using proxies for automation, it's crucial to rotate them regularly to minimize detection and bans by target websites. A proxy rotation strategy distributes requests across multiple proxies, lowering the likelihood of hitting rate limits or triggering protection measures. You can achieve this with an online proxy list generator that provides a diverse set of proxies, or with a fast proxy scraper that fetches fresh proxies often.
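A simple way to rotate is round-robin: hand out proxies in order, wrap around at the end, and remove any proxy that gets banned. The following is a minimal sketch of that idea. The class name and its methods are hypothetical, and production setups often add weighting or per-site cooldowns on top.

```python
from collections import deque


class ProxyRotator:
    """Round-robin rotation over a pool of proxies (illustrative sketch)."""

    def __init__(self, proxies):
        # dict.fromkeys removes duplicates while keeping the original order.
        self._pool = deque(dict.fromkeys(proxies))

    def next(self):
        """Return the next proxy and move it to the back of the queue."""
        if not self._pool:
            raise LookupError("no proxies left in the pool")
        proxy = self._pool[0]
        self._pool.rotate(-1)
        return proxy

    def discard(self, proxy):
        """Remove a proxy that was banned or stopped responding."""
        try:
            self._pool.remove(proxy)
        except ValueError:
            pass  # already removed, nothing to do
```

In a scraper you would call `rotator.next()` before each request and `rotator.discard(proxy)` whenever a proxy is blocked or times out repeatedly.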

Evaluating the reliability and speed of your proxies is just as important. Run them through a proxy checker before you start data extraction. Tools that report detailed data on response time and latency will help you find the most efficient proxies for your automated tasks. It's also essential to monitor your proxies continuously. A proxy validation tool should be part of your routine so that you are always using high-quality proxies that are still active.
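Continuous monitoring can be as simple as counting consecutive failures per proxy and retiring a proxy once it crosses a threshold. The sketch below shows one possible shape for this. The class name and the default of three failures are arbitrary choices for the example.

```python
class ProxyHealth:
    """Track consecutive failures per proxy (illustrative sketch)."""

    def __init__(self, max_failures=3):
        self.max_failures = max_failures
        self._failures = {}

    def record(self, proxy, ok):
        """Record the outcome of one request made through the proxy."""
        if ok:
            self._failures[proxy] = 0  # a success resets the counter
        else:
            self._failures[proxy] = self._failures.get(proxy, 0) + 1

    def is_active(self, proxy):
        """A proxy stays active until it fails max_failures times in a row."""
        return self._failures.get(proxy, 0) < self.max_failures

    def active(self, proxies):
        """Filter a list of proxies down to those still considered healthy."""
        return [p for p in proxies if self.is_active(p)]
```

Resetting the counter on success means an occasional timeout does not retire an otherwise good proxy; only a run of failures does.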

Finally, understand the distinction between private and public proxies when setting up your automation. Although free public proxies are cost-effective, they often lack the reliability and security of private options. If your automated projects require stable and protected connections, investing in private proxies may be worthwhile. Weigh your needs for anonymity and speed to pick the best proxies for data extraction and keep your operations running smoothly.

Public vs Private Proxy Servers: Everything You Need to Know

April 6, 2025, 10:43

In today's digital era, anonymity and privacy have become vitally important, notably for those involved in web scraping, data extraction, and automation. At the center of maintaining this privacy are proxy servers, which serve as intermediaries between users and the internet. When it comes to proxies, you often hear terms like public and private proxies. Understanding the distinction between these two types is crucial for anyone looking to browse the internet safely and effectively.

Public proxies are available to anyone and are often free to use, making them an attractive option for casual internet users. However, their open nature can lead to problems such as slower speeds, variable reliability, and potential security risks. Private proxies, by contrast, offer dedicated resources, improving privacy and speed at a premium price. In this article, we will explore the differences between public and private proxies, look at essential tools like proxy scrapers and checkers, and provide insights on how to locate quality proxies for your particular purposes. Whether you are a newcomer trying to obtain free proxies or an experienced user seeking the best tools for data extraction, this guide covers what you need to know.

Understanding Proxies

Proxy servers act as intermediaries between users and the web, providing privacy and safety in online interactions. When a person connects through a proxy, their requests are routed via the proxy server, which conceals their IP address. This lets users access blocked content, improve their privacy, and reduce direct exposure to dangerous websites or malicious actors.

There are various types of proxies, including HTTP, SOCKS4, and SOCKS5, each meeting specific requirements. HTTP proxies are designed for web traffic, while SOCKS proxies provide a more flexible solution that can handle a range of traffic types, including HTTP and FTP. SOCKS5 in particular offers additional features such as support for user authentication, UDP, and IPv6, making it a favored choice for users seeking better control and reliability.

The difference between public and private proxy servers matters, especially for jobs like web scraping and automation. Public proxies are often free and widely available, but they tend to be slower and less dependable because many users share them. In contrast, private proxies are typically paid services that offer exclusive access, higher speed, and improved privacy, making them ideal for professional applications where performance and safety are paramount.

Public vs Private Proxies

Public proxies are accessible to anyone on the web. They are often free or very inexpensive, which makes them an appealing option for occasional users or those who need proxies for quick tasks. However, since these proxies are available to everyone, they tend to be less reliable and more prone to downtime, lag, and security issues. Many users encounter IP bans or throttling when using public proxies for web scraping or automation tasks, because many people use the same proxies at the same time.

In contrast, private proxies are reserved for a specific user or organization. This exclusivity provides a far more stable and secure connection, making them well suited to tasks that require reliable performance, such as large-scale data extraction or web automation. Private proxies typically offer better anonymity and speed, as their limited user base results in lower traffic. Users who perform critical web scraping or need specific geographic locations may find private proxies to be the better choice.

The decision between public and private proxies ultimately depends on your requirements and budget. If you are looking for performance, dependability, and privacy, private proxies are worth the investment. On the other hand, if you are experimenting with proxy scraping tools or only need proxies for temporary tasks, open proxies may suffice. Understanding the distinctions can help you select the best proxies tailored for your specific applications.

Finding and Scraping Proxies

When you set out to find and scrape proxies, several approaches and tools can make the task easier. One efficient method is to use a free proxy scraper that automatically gathers available proxies from different sources on the internet. Such scrapers comb through websites and forums that list proxies and pull out live, working entries. Tools like ProxyStorm can speed up this process and simplify access to a broader range of proxies, which can be crucial for tasks that require multiple IP addresses.

After obtaining a list of potential proxies, it is crucial to check their functionality and speed. This is where a reliable proxy checker comes into play. When you run your scraped proxies through a verification tool, you can determine whether they are operational, how quickly they respond, and what level of anonymity they provide. A good free proxy checker will help you keep only top-tier entries in your final list. This validation step is important because not all gathered proxies are guaranteed to be functional or appropriate for your specific needs.

If you're interested in more advanced techniques, proxy scraping with Python is a common way to automate the task. Python offers libraries and frameworks that simplify both scraping and validating proxies. By writing custom scripts, users can tailor their scraping to specific websites and filter proxies by the attributes they want, such as HTTP, SOCKS4, or SOCKS5 type. This enables a more customized approach to finding high-quality proxies for tasks such as web scraping and automation.
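As a minimal sketch of the extraction step, the function below pulls `ip:port` entries (optionally prefixed with a scheme such as `socks5://`) out of any text, validates them, and removes duplicates. It assumes the page content has already been downloaded as a string. The function name and the default scheme of `http` for unlabeled entries are assumptions for this example.

```python
import re
from ipaddress import ip_address

# Optional scheme, then an IPv4 address and a port. The lookarounds stop the
# pattern from matching in the middle of a longer run of digits.
PROXY_RE = re.compile(
    r"(?:(https?|socks4|socks5)://)?"
    r"(?<![\d.])(\d{1,3}(?:\.\d{1,3}){3}):(\d{1,5})(?!\d)",
    re.IGNORECASE,
)


def extract_proxies(text, default_scheme="http"):
    """Return unique, validated proxies found in text as 'scheme://ip:port'."""
    found = {}
    for scheme, host, port in PROXY_RE.findall(text):
        try:
            ip_address(host)  # rejects impossible addresses such as 999.1.1.1
        except ValueError:
            continue
        if not 0 < int(port) < 65536:
            continue
        # Keep the first scheme seen for each host and port pair.
        found.setdefault((host, int(port)), (scheme or default_scheme).lower())
    return [f"{s}://{h}:{p}" for (h, p), s in found.items()]
```

Filtering by type is then a one-liner, for example `[p for p in extract_proxies(page) if p.startswith("socks5://")]`. Extracted entries are only candidates and still need to be checked for liveness, speed, and anonymity.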

Proxy Server Speed and Anonymity Assessment

When using proxies, testing their speed is crucial for good performance, notably in web scraping or automation tasks. Users can rely on various proxy verification tools to measure the response time and latency of proxy servers. Fast proxy scrapers often include features that not only gather proxy lists but also let you check the speed of each entry. This helps eliminate slow and unresponsive proxies, resulting in more effective data collection and browsing.

Anonymity is another important factor when choosing proxy servers, notably when handling confidential data or bypassing location-based restrictions. Proxies offer different levels of anonymity, ranging from transparent to elite. The anonymity of a proxy can be assessed with tools that check for revealing headers, IP leaks, and other markers. Whether a proxy is a standard HTTP proxy, SOCKS4, or SOCKS5 can also affect its anonymity and functionality, so it is worth validating these details during your testing.

To test both speed and anonymity effectively, use a mix of testing tools. Proxy scraping applications help you collect candidate proxies, while good verification tools measure how they actually perform. By testing thoroughly, users can identify reliable proxies that improve their data extraction and automation tasks, making for a smoother and safer experience.

Best Proxy Tools for Web Scraping

When it comes to web scraping, picking the right proxy tools is crucial for effective data extraction. Among the leading tools, ProxyStorm stands out for its intuitive interface and robust features. It provides a dependable proxy scraper that quickly gathers high-quality proxies from various sources, so you can access the data you need without disruptions. This is especially useful for those who want to scrape data without being blocked by target websites.

In addition, a proxy checker is essential for verifying the performance and response time of the proxies you gather. The best proxy checkers let users test many proxies simultaneously to confirm that they are working properly. These tools often provide detailed information about each proxy's responsiveness and anonymity, helping you select the most appropriate ones for your web scraping tasks. A good free proxy checker combines effectiveness with ease of use.

Finally, adding an online proxy list generator to your scraping workflow can simplify the process of locating and organizing proxies. These generators can produce extensive lists of free proxies that you can quickly plug into your scraping projects. They are notably useful for web scraping with Python, where efficient access to reliable proxies can substantially improve your automation and overall scraping performance. With these tools in place, you can keep consistent access to the data sources you depend on.

Free versus Paid Proxies

As you evaluate proxy services for your online tasks, it's important to understand the differences between free and paid options. Free proxy services are appealing because they cost nothing, but they come with major disadvantages. Many free proxies are unreliable and suffer from poor service, including slow speeds and high latency. Additionally, these proxies are often shared among numerous users, which can result in service interruptions and privacy issues, as they may log your data or expose you to malicious threats.

On the other hand, premium proxies provide a more reliable and safer option. These proxy services typically offer better speed and reliability, as well as exclusive connections, which minimizes the chance of being banned or identified by sites. Paid service providers usually come with customer support and various options like proxy rotation and privacy protection. This makes them perfect for tasks that demand steady service, including web scraping and search engine optimization tasks.

In the end, the choice between free and premium proxies depends on your specific requirements. For casual browsing, free proxies might suffice. However, for tasks like web scraping, data extraction, or any activity that needs anonymity and speed, investing in a good premium service is generally the better choice. It can save you time and frustration, allowing you to concentrate on your primary goals without the limitations of free proxies.

Conclusion

In web scraping and data extraction, understanding the differences between public and private proxies is crucial. Public proxies, while free and readily available, frequently come with drawbacks such as slow speeds, lower security, and a higher risk of being blocked. Private proxies, by contrast, offer better performance, improved anonymity, and higher reliability, which makes them a favored choice for professional web scrapers and automated tasks.

Choosing the appropriate proxy solution depends on your particular needs. If you're starting out or performing less demanding scraping tasks, a free proxy scraper may be enough. However, as your needs grow and you aim to scrape high-quality data or automate processes, investing in a fast proxy scraper and a reliable proxy verification tool becomes crucial. Be sure to evaluate the speed and anonymity of your proxies to get the best performance.

In the end, whether you opt for public or dedicated proxies, the key is to leverage the existing tools efficiently. By using the appropriate proxy list generator and checking utilities, you can manage the complexities of proxy usage, making certain that your web scraping efforts are effective and productive.